The Rise of AI Video Generation: A Year in Review

Looking back, 2023 wasn’t just another year; it was a breakthrough year for AI video generation. At the beginning of 2023, no public text-to-video models existed. Today there are numerous video generation products, with millions of users generating short clips from simple text or image prompts.

An Overview of the Current Landscape

While this surge in AI video technology is impressive, the products still have clear limitations. Most models produce clips of only three to four seconds, and output quality is often inconsistent. Users who attempt more complex narratives, or animated features comparable to Pixar’s, still struggle to achieve character consistency and narrative coherence.

The Transformation Begins

Despite these limitations, the rapid progress of the past year suggests that we are in the early stages of a major transformation, much like the one image generation went through. Text-to-video models and their offshoots continue to gain momentum, which makes the outlook promising.

Key Developments in the AI Video Arena

To understand this field, it helps to look at the most significant developments, the notable companies, and the open questions surrounding AI video generation.

The Products Landscape

Surveying the tools available today, we have identified 21 public video generation products this year. Names like Runway, Pika, Genmo, and Stable Video Diffusion may be familiar, but there are many others worth discovering in this rapidly evolving sector.

Many of these products come from startups that initially launched as Discord bots. This approach has advantages: developers can focus on model quality without building a consumer-facing interface, and they can reach Discord’s 150 million monthly active users, especially if the bot is featured on the platform’s “Discover” page.

However, as these products mature, many are launching their own websites and mobile applications. Discord is a useful springboard for growth, but it limits the workflow and user experience a product can offer.

The Absence of Big Tech

Notably absent from the list of public products are major tech companies such as Google and Meta. This year saw the unveiling of several promising models, including Meta’s Emu Video, Google’s VideoPoet, and ByteDance’s MagicVideo. However, none of these companies has publicly released its model yet. Instead, they have published research papers and demo videos, leaving open the question of when, or if, these models will reach the market.

Given their enormous user bases, it is curious that these companies are not using their existing reach to capture a share of this emerging market. Legal, safety, and copyright concerns may explain their caution, which in turn opens the door for smaller players to carve out significant market share.

The Uncertain Future of AI Video

Looking ahead, one thing is clear: there is much to refine before AI video becomes mainstream. Today, reaching the “magic moment,” where the generated content matches what the user had in mind, usually takes multiple attempts and edits.

Key Challenges Ahead

For those working in this sector, several core challenges still need to be addressed:

Control Over Creations: A primary question is whether users can control what happens in a scene. For instance, given the prompt “man walking forward,” does the model actually depict that movement? Some tools have begun adding features to adjust camera movement and apply effects, but how accurately the output follows these instructions remains a concern.

Temporal Coherence: Keeping characters, objects, and backgrounds consistent from frame to frame is another common problem. Most current models struggle with this, so characters and objects morph unexpectedly during a clip. In practice, coherent videos longer than a few seconds are usually produced by transforming an existing video rather than by generating an entirely new clip.

Moreover, there’s the pressing question of how to extend the length of generated clips. Many tools limit outputs to a few seconds because quality is hard to maintain over longer durations.
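To make the temporal coherence problem more concrete, here is a minimal Python sketch of one crude way to spot abrupt changes between consecutive frames. It is an illustration only, not a metric used by any of the products mentioned: the function names, the toy 2x2 grayscale frames, and the 0.25 threshold are all assumptions made for this example.

```python
from typing import List, Sequence

# Assumption for this sketch: a frame is a grid of grayscale intensities in [0, 1].
Frame = Sequence[Sequence[float]]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two same-sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    if count == 0:
        raise ValueError("frames must contain at least one pixel")
    return total / count


def flicker_scores(frames: Sequence[Frame]) -> List[float]:
    """Difference score for each consecutive pair of frames in a clip."""
    return [frame_difference(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]


def flag_jumps(scores: Sequence[float], threshold: float = 0.25) -> List[int]:
    """Indices of transitions whose score exceeds the (hypothetical) threshold."""
    return [i for i, score in enumerate(scores) if score > threshold]


# Toy clip: two nearly identical frames followed by an abrupt change.
clip = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.5]],
    [[1.0, 1.0], [1.0, 1.0]],
]
scores = flicker_scores(clip)
jumps = flag_jumps(scores)
print(scores, jumps)
```

A score like this cannot tell a morphing character apart from legitimate fast motion or a scene cut, so it is at best a rough signal for deciding which clips to review by hand.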

The Big Questions Persist

AI video today feels comparable to where text generation stood at the time of GPT-2. Researchers and developers are left asking: when will video generation have its “ChatGPT moment”? There is no consensus on what it will take to bring this technology into everyday consumer use.

Can the current diffusion architecture work for video? Today’s models are primarily diffusion-based: broadly speaking, they generate frames and then try to keep the animation between them consistent, without any intrinsic understanding of 3D space or of how objects should interact. This often results in undesirable effects such as characters becoming distorted or losing their grounding within a scene.

Where will quality training data come from? Unlike text and images, high-quality labeled video data is hard to come by. Video has no widely used public equivalent of Common Crawl for text or LAION for images. Licensing content from production companies is one potential path, but it raises questions about who will be able to access that data.

How will various use cases segment across platforms? Just like in image generation, it’s likely that different models will excel in particular areas, requiring users to navigate distinct workflows for unique needs.

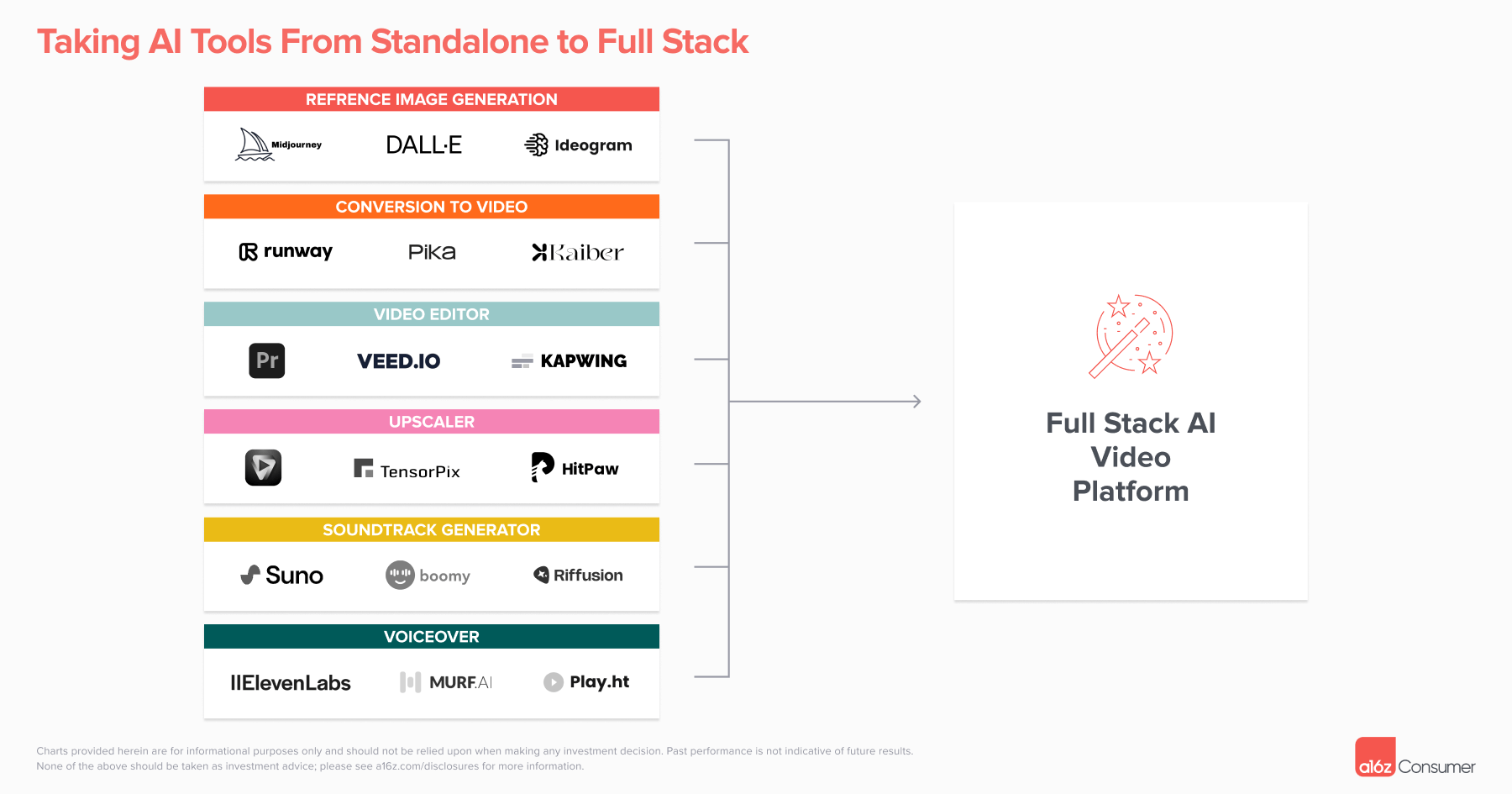

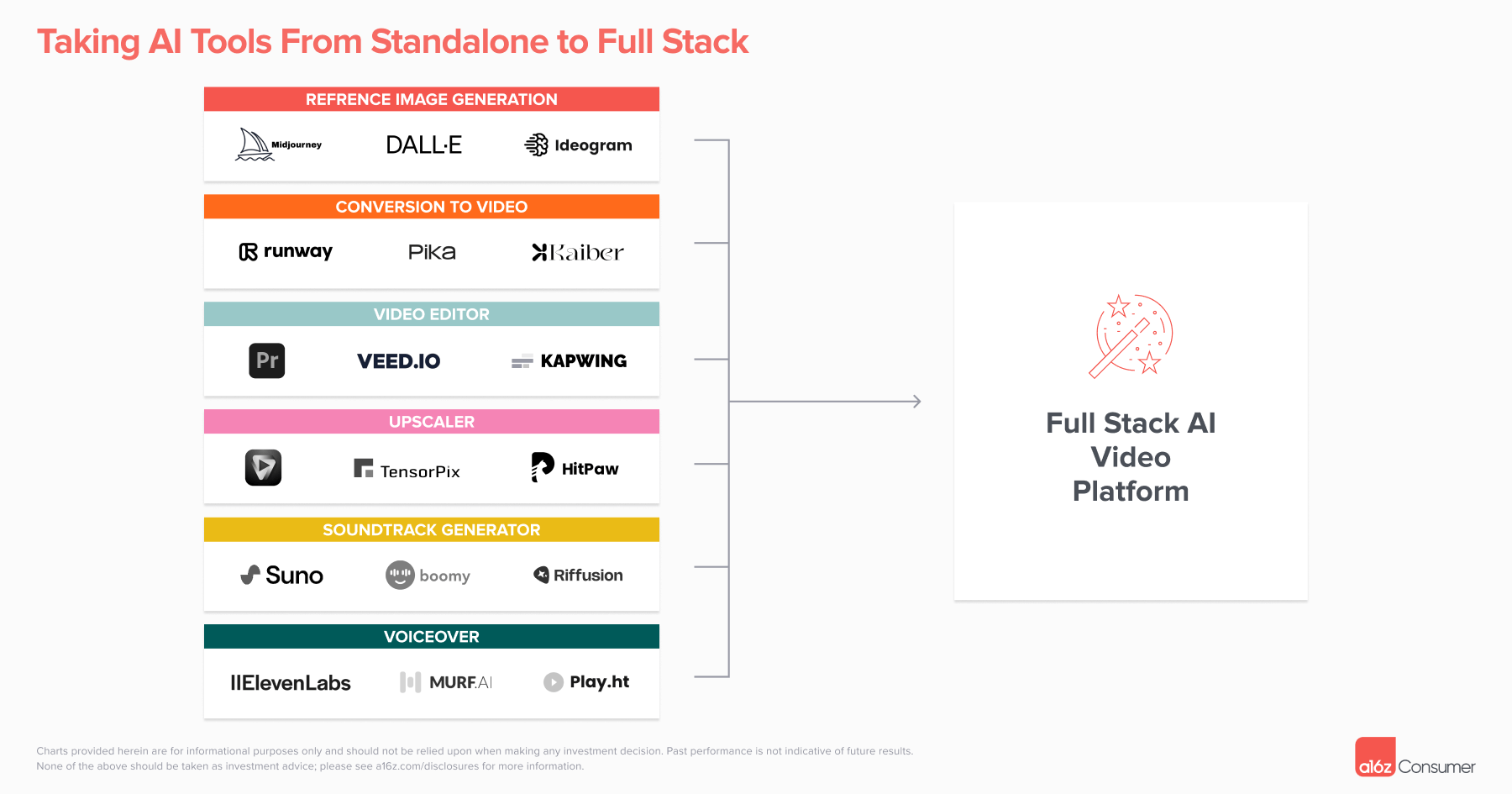

Ownership of Creative Workflow

Today, creating compelling video content often means editing and collaborating across many separate tools. A single, effective workflow that covers both video creation and editing would serve creators far better.

Many envision platforms integrating editing capabilities directly within their ecosystems to facilitate smoother transitions between video generation and post-production. The goal is flexibility and ease, minimizing the back-and-forth between various tools.

Conclusion: Looking Beyond 2023

Reflecting on the advances in AI video technology this past year, it’s clear that we stand on the threshold of something significant. Today’s tools have real limitations, but the pace of innovation is high. As both startups and established companies continue to refine their technologies, the day may not be far off when AI video generation transforms creative storytelling. If you’re working in this space, your contributions could help pave the way for the next generation of video production.

For insights and updates on ongoing developments, feel free to connect through various channels and stay informed. Your participation in this conversation is crucial for shaping the future of AI video technology.
