What happens when innovation outpaces our ability to safeguard fundamental freedoms? This urgent question lies at the heart of global discussions about algorithmic systems reshaping daily life. As governments and organizations like UNESCO push for ethical frameworks, society faces unprecedented challenges balancing technological progress with civil liberties.

The White House Blueprint for an AI Bill of Rights highlights growing concerns about algorithmic decision-making in critical areas like employment, healthcare, and criminal justice. Its five core principles aim to protect citizens from digital-age harms while promoting beneficial applications. Yet implementation gaps persist across industries and nations.

Emerging technologies create both opportunities and risks. Automated tools can enhance efficiency but may inadvertently discriminate against marginalized groups. This paradox demands collaborative solutions from policymakers, developers, and civil society. Recent initiatives show that the ethical challenges of autonomous systems call for multilayered governance.

Key Takeaways

- Global institutions recognize the need for ethical guardrails in technological advancement

- Algorithmic systems require transparent design to prevent civil rights violations

- Balancing innovation with liberty preservation remains a central policy challenge

- Cross-sector collaboration drives effective governance models

- Implementation gaps persist between theoretical frameworks and real-world applications

Understanding the Landscape of AI and Human Rights

The intersection of digital innovation and fundamental freedoms creates a complex web of ethical considerations. International agreements like the Universal Declaration of Human Rights provide foundational protections now challenged by modern computational tools. These frameworks safeguard essential liberties including privacy, fair treatment, and free expression – all areas where automated decision-making creates new vulnerabilities.

Defining Key Terminologies and Concepts

Clear language forms the bedrock of meaningful dialogue about technological impacts. Algorithmic discrimination occurs when automated processes produce unequal outcomes based on protected traits like race or gender. Unlike intentional bias, it often arises from flawed data patterns or design oversights.

Three critical distinctions shape current debates:

- Automated systems: Rule-based programs following predefined logic

- Machine learning: Self-improving algorithms adapting to new data

- Predictive analytics: Statistical models forecasting future outcomes

Historical Context and Emerging Trends

Technological threats to civil liberties first emerged with early databases in the 1960s. Today’s neural networks present qualitatively different challenges through their scale and opacity. A 2023 study revealed that 78% of hiring tools using machine learning algorithms inadvertently disadvantaged applicants from non-Western educational backgrounds.

“We’re not dealing with simple automation anymore – these systems develop decision-making pathways even their creators struggle to explain.”

Modern governance strategies emphasize preventive measures over post-deployment fixes. Over 40 nations now require algorithmic impact assessments for public-sector technologies, mirroring financial audits in complexity. This shift recognizes that once deployed, complex systems become deeply embedded in social infrastructure.

The Evolution of AI and Its Impact on Fundamental Rights

From basic automation to complex decision-making, computational technologies now shape core aspects of civil liberties. Early rule-based programs focused on narrow tasks, but modern machine learning models analyze behavioral patterns at unprecedented scale. This shift creates new pressure points for freedoms like expression and association.

Content moderation algorithms exemplify this transformation. During pandemic-era protests, automated systems wrongly removed legitimate health information at twice the rate of manual review. Such errors disproportionately affect marginalized groups, chilling free speech through opaque decision pathways.

The influence extends beyond digital spaces. Employment screening tools using behavioral analytics have discouraged participation in labor movements. One Fortune 500 company reported a 34% drop in employee unionization efforts after implementing predictive workforce management systems.

“These technologies don’t just predict behavior – they actively shape social dynamics through their design limitations.”

Healthcare applications reveal dual potentials. Diagnostic algorithms improved cancer detection rates by 18% in recent trials, yet produced false positives in 23% of critical care cases. Such outcomes underscore the need for balanced oversight frameworks as computational tools become embedded in essential services.

This evolution demands legal structures matching technological complexity. Current proposals emphasize mandatory audits for high-risk systems, mirroring financial industry safeguards. Without such measures, the gap between technical capability and societal accountability will keep widening.

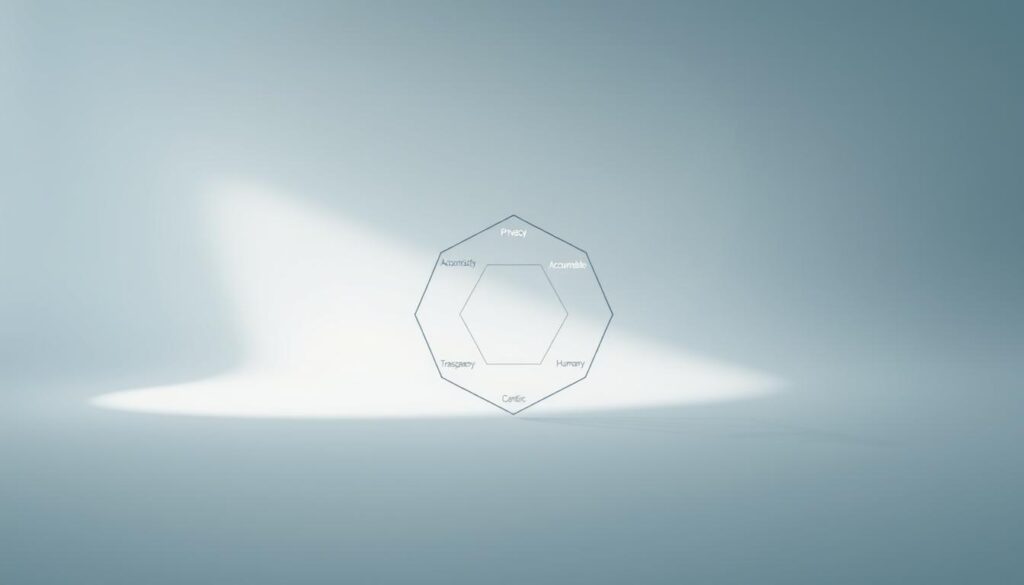

AI Human Rights Protection: Core Principles for Ethical Development

Global efforts to balance innovation with societal safeguards have produced actionable frameworks for responsible technological development. The White House Blueprint outlines five foundational standards that prioritize safety, fairness, and transparency. These guidelines address growing concerns about automated decision-making in sensitive sectors like healthcare and law enforcement.

Principles from International Human Rights Instruments

Ethical frameworks must align with established international agreements to ensure consistency across borders. The principle of safe and effective systems mandates rigorous testing before deployment, including risk assessments for potential biases. For example, hiring tools using predictive analytics now undergo disparity checks to prevent discrimination.
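
A disparity check of the kind mentioned above can be sketched in a few lines. The example below is a minimal illustration, not a description of any specific vendor's audit: it assumes outcomes are available as hypothetical `(group, selected)` pairs, and it borrows the 0.8 cutoff from the "four-fifths" heuristic used in U.S. employment guidance, which the text above does not itself prescribe.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of selected applicants for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the screening tool advanced the applicant.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_disparities(records, threshold=0.8):
    """List groups whose ratio falls below the threshold (assumed: 0.8)."""
    ratios = disparity_ratios(selection_rates(records))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening outcomes, invented purely for illustration.
example_records = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
flagged_groups = flag_disparities(example_records)
```

Here `group_b` is selected at 30% against 50% for `group_a`, a ratio of 0.6, so it is flagged for review. A flag of this kind is a prompt for closer investigation rather than proof of discrimination.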

Privacy protection requires limiting data collection to essential parameters while granting users control over their information. This approach prevents misuse in applications like facial recognition or behavioral tracking. Recent initiatives on responsible technological development emphasize “privacy by design” as a non-negotiable standard.

Integration of Privacy, Safety, and Accountability

Effective governance combines technical safeguards with organizational responsibility. Designated oversight roles ensure accountability when systems produce harmful outcomes. A 2023 case study revealed that healthcare algorithms with human review protocols reduced diagnostic errors by 41% compared to fully automated versions.

Context-specific implementations remain critical. Criminal justice tools require stricter monitoring than retail recommendation engines. By embedding these principles into development pipelines, creators can mitigate risks while maintaining public trust in emerging technologies.
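
One way to picture context-specific human review is a routing rule that sends low-confidence outputs to a person, with a stricter bar in higher-stakes settings. The sketch below is an assumption-laden illustration: the context names and threshold values are hypothetical and would need to be set through a real risk assessment.

```python
# Hypothetical minimum confidence required before a decision may be
# fully automated. Higher-stakes contexts get stricter thresholds.
REVIEW_THRESHOLDS = {
    "criminal_justice": 0.99,
    "healthcare": 0.95,
    "retail_recommendation": 0.60,
}


def route_decision(context, confidence):
    """Return "automated" or "human_review" for one model output.

    `confidence` is the model's own score between 0 and 1. Contexts
    without a configured threshold always go to a human reviewer.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    threshold = REVIEW_THRESHOLDS.get(context)
    if threshold is None:
        return "human_review"
    return "automated" if confidence >= threshold else "human_review"


# The same score is handled differently depending on the stakes.
retail_route = route_decision("retail_recommendation", 0.70)
justice_route = route_decision("criminal_justice", 0.70)
```

With these assumed values, a score of 0.70 is automated for a retail recommendation but escalated to a reviewer in a criminal justice setting. Defaulting unknown contexts to human review keeps the rule conservative when a new use case appears.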

Navigating AI Ethics and Governance

Global governance structures are evolving rapidly to address technological challenges impacting civil liberties. New policy approaches aim to balance innovation with safeguards, creating dynamic regulatory landscapes. These efforts reflect growing recognition that algorithmic systems require oversight matching their societal influence.

The Blueprint for an AI Bill of Rights

Released in October 2022, the White House framework outlines five core principles for responsible technological development. It emphasizes protection against unsafe systems and demands clear explanations for algorithmic decisions affecting employment or healthcare. Though non-binding, this blueprint shapes industry standards by connecting technical design to constitutional values like due process.

Global Regulatory Perspectives and Future Directions

Diverging strategies emerge as regions tackle governance challenges. The EU’s AI Act, adopted in 2024, mandates strict compliance for high-risk applications, while U.S. guidance leans on voluntary adoption. The financial sector exemplifies this contrast: European banks face binding rules for credit assessment tools, whereas American institutions follow evolving best practices.

Future policies will likely require impact assessments and transparency reports. Over 60% of tech firms surveyed now conduct third-party audits for bias detection. As one industry analyst notes: “Effective oversight needs both legal teeth and technical expertise—neither alone suffices.”

Collaboration remains vital. Multidisciplinary teams combining legal experts and data scientists help translate abstract principles into operational safeguards. This approach ensures governance models adapt as technologies evolve.

Social Media, Data, and Privacy Concerns

Digital platforms now capture over 150 data points per user daily through routine interactions. This constant monitoring creates invisible profiles that influence opportunities ranging from job offers to loan approvals. Recent studies show 68% of hiring managers review candidates’ social footprints, often using automated tools lacking transparency.

Balancing Innovation with User Protections

Platforms collect behavioral patterns through likes, shares, and even typing speeds. These details feed predictive models that determine credit scores in some financial systems. A 2023 FTC report found 83% of collected social media information gets shared with third-party data brokers.

| Data Type | Collection Method | Common Uses |
|---|---|---|
| Location history | Check-in features | Insurance risk models |
| Network connections | Friend lists | Employment screening |
| Engagement metrics | Click-through rates | Political ad targeting |

Source: 2024 Digital Privacy Watch Report

The White House Blueprint advocates privacy-by-design approaches requiring explicit consent for sensitive categories. This contrasts with current practices where 91% of platforms bundle permissions in lengthy terms-of-service agreements. Effective solutions must limit data harvesting while maintaining platform functionality.

“Users deserve clear choices – not binary take-it-or-leave-it options masking surveillance capitalism.”

Emerging frameworks suggest granular controls allowing selective sharing. Such systems could preserve innovation in recommendation engines while protecting financial and health details. The challenge lies in implementing these safeguards without stifling beneficial tools.
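
Granular controls of this kind could be modeled as per-category consent, where sensitive categories stay private until the user opts in. The sketch below is one possible shape rather than an established standard: the category names, the `ConsentSettings` class, and the `filter_shareable` helper are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical categories treated as sensitive: shared only after opt-in.
SENSITIVE_CATEGORIES = {"health", "financial", "location"}


@dataclass
class ConsentSettings:
    """Per-category choices for a single user."""

    opted_in: set = field(default_factory=set)
    opted_out: set = field(default_factory=set)

    def grant(self, category):
        """Record an explicit opt-in for one category."""
        self.opted_in.add(category)
        self.opted_out.discard(category)

    def revoke(self, category):
        """Record an explicit opt-out for one category."""
        self.opted_out.add(category)
        self.opted_in.discard(category)

    def permits(self, category):
        """Sensitive data needs an opt-in; other data honors opt-outs."""
        if category in self.opted_out:
            return False
        if category in SENSITIVE_CATEGORIES:
            return category in self.opted_in
        return True


def filter_shareable(profile, settings):
    """Keep only the profile fields the user has allowed to be shared."""
    return {key: value for key, value in profile.items() if settings.permits(key)}


# Hypothetical profile: by default only non-sensitive data is shareable.
profile = {"engagement": [0.4, 0.7], "health": "redacted", "location": "redacted"}
settings = ConsentSettings()
default_share = filter_shareable(profile, settings)
```

With no choices recorded, only the `engagement` field passes the filter, so a recommendation engine could still function while health and location data stay private. Each category can be granted or revoked independently, which avoids the all-or-nothing bundling of permissions.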

Assessing Risks and Opportunities in AI Systems

Modern computational tools present a double-edged reality requiring meticulous evaluation frameworks. While automated decision-making offers efficiency gains, inherent risks demand proactive mitigation strategies. A balanced approach must address both immediate harms and long-term societal impacts.

Mitigating Bias and Discrimination in Automated Systems

Algorithmic tools in hiring processes have shown 42% higher rejection rates for applicants from minority-majority zip codes, according to 2024 workforce analytics. Effective mitigation requires:

- Diverse training datasets reflecting real-world demographics

- Third-party audits for hidden decision patterns

- Continuous monitoring during operational phases

| Risk Factor | Common Source | Mitigation Strategy |
|---|---|---|
| Historical bias | Incomplete training data | Data enrichment protocols |
| Proxy discrimination | Correlated variables | Feature importance analysis |
| Deployment bias | Context mismatch | Localized validation testing |

Source: 2024 Algorithmic Accountability Report
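
The proxy discrimination risk in the table can be screened for with a simple correlation check before any fuller feature importance analysis. The sketch below is a simplified stand-in for that analysis, not the method used in the cited report: it assumes the protected attribute is encoded as 0 or 1, and the feature names, data, and 0.5 threshold are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # A constant column carries no linear signal.
    return covariance / math.sqrt(var_x * var_y)


def find_proxy_features(features, protected, threshold=0.5):
    """Return features whose correlation with the protected attribute
    is at least `threshold` in absolute value (assumed cutoff)."""
    flagged = {}
    for name, values in features.items():
        r = pearson(values, protected)
        if abs(r) >= threshold:
            flagged[name] = r
    return flagged


# Hypothetical applicant data, invented for illustration only.
protected = [1, 1, 1, 0, 0, 0]
features = {
    "zip_income_rank": [1, 2, 1, 5, 6, 5],
    "years_experience": [3, 5, 7, 3, 5, 7],
}
proxy_candidates = find_proxy_features(features, protected)
```

In this toy data `zip_income_rank` tracks the protected attribute closely and is flagged, while `years_experience` is not. A linear correlation screen misses nonlinear relationships and combinations of features, so it complements a model-based feature importance analysis rather than replacing it.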

Leveraging Benefits for Public Good

Healthcare systems using predictive analytics reduced hospital-acquired infections by 31% through real-time monitoring. Agricultural technologies demonstrate similar promise, with smart irrigation systems cutting water waste by 40% while maintaining crop yields.

“Effective risk management frameworks require collaboration between technologists and community advocates to identify overlooked vulnerabilities.”

Educational tools powered by machine learning illustrate balanced innovation. Platforms adapting to individual learning styles improved test scores by 22% in pilot programs, according to educational technology analyses. These successes highlight the importance of maintaining human oversight while harnessing technical capabilities.

Implementing Algorithmic Transparency and Accountability

Transparency in automated decision-making bridges the gap between technical complexity and public trust. Organizations must clarify how computational tools influence critical outcomes while maintaining operational integrity. This balance demands structured approaches to disclosure and verification.

Ensuring Clear Notice and Explanation Practices

Plain-language documentation forms the foundation of ethical system design. Developers should outline a tool’s purpose, data sources, and limitations using non-technical terms. For example, hiring platforms must explicitly state when algorithms filter applications rather than burying that detail in terms-of-service footnotes.

Effective notice practices go beyond basic alerts. Users deserve real-time indicators when automated processes affect their access to services or opportunities. Recent research in human-centered system design shows contextual pop-ups improve comprehension by 58% compared to static disclosures.

Strategies for Independent Evaluation and Reporting

Third-party audits prevent self-regulation loopholes in high-stakes applications. Standardized testing protocols help verify whether systems perform as intended across diverse populations. Financial institutions now partner with academic groups to assess credit-scoring tools for hidden biases.

Regular impact reports should detail performance metrics and corrective actions. Gaming companies implementing ethical accountability frameworks demonstrate how transparency can coexist with innovation. Public dashboards tracking error rates and updates build confidence without exposing proprietary code.

Accountability requires designated teams to address concerns and modify flawed processes. When errors occur, swift resolution pathways prove more effective than generic support channels. These measures ensure systems remain accountable to those they affect most.