What happens when groundbreaking innovation collides with deep-rooted human skepticism? As intelligent systems reshape workplaces, healthcare, and daily routines, 52% of Americans say they feel more concerned than excited about these advances, a tension that defines our technological moment.

Recent studies reveal a population both informed and apprehensive. While 90% of U.S. adults acknowledge familiarity with smart technologies, only one-third claim detailed understanding. This gap between awareness and comprehension fuels polarized perspectives: 44% predict net negative consequences from widespread adoption, while 38% anticipate overall benefits.

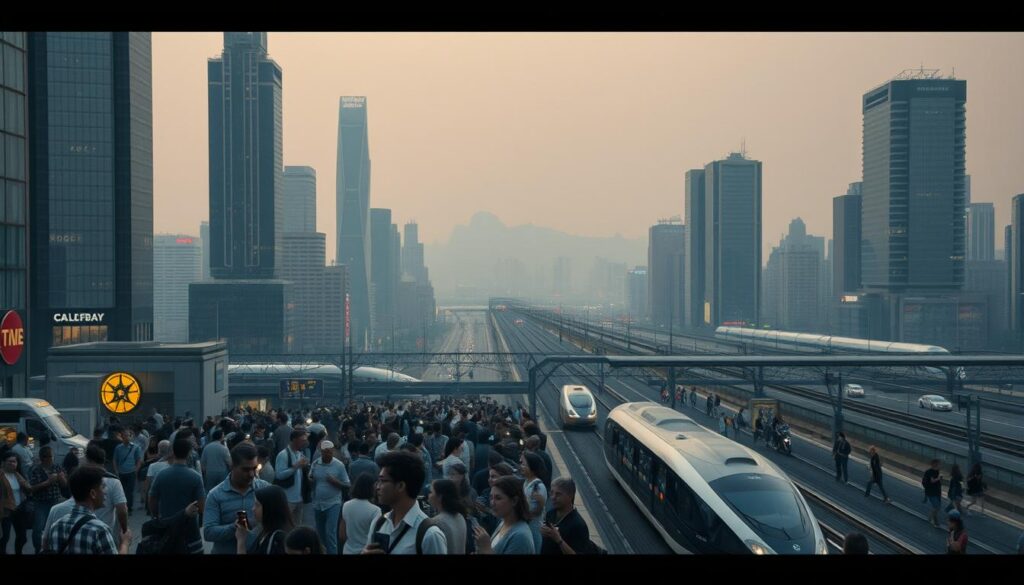

The complexity deepens when examining demographic patterns. Younger urban populations often demonstrate cautious optimism, contrasting with older rural groups showing heightened wariness. These divisions reflect broader societal debates about automation’s role in employment, data privacy, and human decision-making.

Major research institutions like Pew Research Center and Quinnipiac University employ sophisticated methodologies to track these evolving attitudes. Their findings illuminate how personal experiences with voice assistants, recommendation algorithms, and automated services shape collective perceptions of technological integration.

Key Takeaways

- Over half of Americans express more concern than excitement about smart technology integration

- High awareness (90%) contrasts with low detailed understanding (33%) of advanced systems

- 44% predict that harms will outweigh benefits as these systems spread through daily life

- Demographic differences reveal urban-rural and age-related perception gaps

- Ongoing ethical debates influence corporate strategies and regulatory discussions

Examining AI Public Opinion in Everyday Life

Modern life increasingly intersects with intelligent systems, yet recognition of their presence remains uneven. A 2022 national survey found that just 30% of adults could correctly identify all six common smart technology applications it asked about. This gap between exposure and comprehension shapes how people interact with evolving tools.

Survey Data on Awareness and Understanding

Educational attainment strongly influences recognition of automated systems. College graduates demonstrate 2.3x greater accuracy in identifying chat-based interfaces compared to those without degrees. Gender disparities persist, with male respondents reporting 18% higher familiarity scores across age groups.

Generational Knowledge Patterns

Quinnipiac University research reveals stark divides: 67% of Gen Z respondents describe substantial smart tech knowledge versus 33% of Baby Boomers. The Silent Generation shows particular difficulty, with 89% reporting limited understanding of recommendation algorithms.

These findings highlight how demographic factors shape technological literacy. As systems become more embedded in daily routines, addressing these comprehension gaps grows increasingly urgent for equitable adoption.

Tracking Technological Impact on Society

From hospital corridors to classroom discussions, intelligent systems now influence decisions that shape human outcomes. This transformation sparks both optimism and hesitation, particularly in fields requiring specialized expertise.

Applications in Health and Education

Healthcare reveals a paradox: 38% believe advanced systems improve patient care, while 33% predict negative effects. Yet 65% would trust these tools for personal skin cancer screenings. “Diagnostic accuracy matters more than philosophical debates when lives are at stake,” notes a Johns Hopkins researcher.

In education, 67% of teens have heard of ChatGPT, and 19% of those teens have used it for schoolwork. Acceptance varies by task:

- 69% approve using it for research

- 39% support math problem-solving

- 57% reject essay writing assistance

Shifts in Daily Routines and Work

Workplace tools now automate tasks from scheduling to data analysis. While productivity gains average 14% in tech-driven sectors, 41% of employees worry about skill relevance. Evening routines increasingly involve smart home devices, with 58% of households using voice-activated systems weekly.

Educational institutions face new challenges balancing technology integration with core learning objectives. As adaptive learning platforms personalize curricula, 63% of teachers report improved engagement—but 48% express concerns about over-reliance on automated feedback.

Insights from Recent U.S. Surveys

Accurately measuring attitudes toward technology requires rigorous methodology. Leading institutions use probability-based sampling and statistical weighting to capture perspectives across America’s diverse population.

Methodologies and Sample Analysis

Quinnipiac University’s April 2023 poll surveyed 1,562 U.S. adults by phone, with 47% of interviews conducted on landlines and 53% on mobile phones. Covering both helps reach people regardless of which kind of phone they rely on, and employed adults (867 respondents) were analyzed separately for workplace insights.

| Institution | Sample Size | Contact Method | Key Feature |
|---|---|---|---|
| Quinnipiac | 1,562 adults | Live phone interviews | ±2.5 percentage-point margin of error |
| Pew Research | Multi-survey aggregate | Mixed-mode design | Longitudinal tracking |
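The ±2.5-point margin reported for Quinnipiac's 1,562-person sample matches, to one decimal place, what the textbook formula for a proportion gives. The sketch below is a simplification that assumes simple random sampling, 95% confidence (z = 1.96), and the most conservative case p = 0.5. Real polls are weighted, and pollsters often inflate the margin by a design effect, so published figures can differ. The function name `margin_of_error` is illustrative, and the margin shown for the 867-person employed subsample is computed here, not a figure Quinnipiac reported.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    Assumes a simple random sample of size n (no design effect). p = 0.5
    maximizes p * (1 - p), giving the most conservative margin; z = 1.96
    corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    # 867 = employed subsample, 1,562 = full sample, then 2x and 4x the full sample
    for n in (867, 1562, 3124, 6248):
        print(f"n = {n:>5}: ±{100 * margin_of_error(n):.1f} percentage points")
    # n =   867: ±3.3 percentage points
    # n =  1562: ±2.5 percentage points
    # n =  3124: ±1.8 percentage points
    # n =  6248: ±1.2 percentage points
```

Because the margin shrinks with the square root of the sample size, quadrupling the sample only halves it, which is why each extra interview buys less precision than the last.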

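Random digit dialing gives every working phone number a known chance of being selected, which limits selection bias, although it cannot remove bias introduced by people who decline to respond. Researchers then weight responses so that the sample matches the population on demographic factors such as age, education, and region. When designing a survey, researchers also balance sample size against practical constraints: larger samples shrink the margin of error, but only in proportion to the square root of the sample size, while costs rise with every additional interview.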
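The weighting step described above can be illustrated with its simplest variant, cell-based post-stratification on a single variable: each respondent's weight is their group's population share divided by that group's share of the sample. This is a hedged sketch, not Pew's or Quinnipiac's actual procedure; production pollsters usually rake across several variables at once. The function names, age groups, population shares, and responses below are all hypothetical.

```python
from collections import Counter


def poststratify_weights(groups, population_shares):
    """One weight per respondent: population share / sample share of their group.

    With these weights, each group's weighted share of the sample equals its
    population share, and the weights sum to the sample size.
    """
    n = len(groups)
    counts = Counter(groups)
    return [population_shares[g] / (counts[g] / n) for g in groups]


def weighted_share(answers, weights):
    """Weighted proportion of respondents whose answer is True."""
    return sum(w for a, w in zip(answers, weights) if a) / sum(weights)


# Hypothetical sample: younger adults are over-represented (6 of 10 respondents)
groups = ["18-49"] * 6 + ["50+"] * 4
# Hypothetical answers to "more concerned than excited?": 2 of 6 younger, 3 of 4 older
concerned = [True, True, False, False, False, False, True, True, True, False]
# Hypothetical population: each age group is half of all adults
population_shares = {"18-49": 0.5, "50+": 0.5}

weights = poststratify_weights(groups, population_shares)
print(f"unweighted: {sum(concerned) / len(concerned):.3f}")  # 0.500
print(f"weighted:   {weighted_share(concerned, weights):.3f}")  # 0.542
```

The weighted estimate moves toward the answers of the under-represented older group. Weighting can only correct for characteristics the pollster measures; it cannot fix bias on traits that were never recorded.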

Pew’s approach combines multiple surveys to track attitude shifts over time. Comparing results across these surveys shows how exposure to emerging technologies correlates with acceptance rates across age groups and regions.
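Tracking attitudes over time raises a practical question: is a change between two survey waves larger than sampling error alone would produce? A standard check is the two-proportion z-test, sketched below. It assumes two independent simple random samples; panel surveys that re-interview the same people produce correlated waves, and weighting adds a design effect, so treat the result as an approximation. The function names and all wave figures in the example are hypothetical.

```python
import math


def change_z_score(p1: float, n1: int, p2: float, n2: int) -> float:
    """z statistic for p2 - p1, using the pooled standard error.

    Assumes two independent simple random samples of sizes n1 and n2.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p2 - p1) / se


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    # Hypothetical waves: 40% -> 52% "more concerned", 1,500 respondents each
    z = change_z_score(0.40, 1500, 0.52, 1500)
    print(f"12-point shift: z = {z:.1f}, p = {two_sided_p_value(z):.1g}")
    # Hypothetical waves: 50% -> 52%, 1,000 respondents each
    z = change_z_score(0.50, 1000, 0.52, 1000)
    print(f"2-point shift:  z = {z:.1f}, p = {two_sided_p_value(z):.2f}")  # z = 0.9, p = 0.37
```

Under these assumptions, a 12-point shift between samples of 1,500 is far outside sampling error, while a 2-point shift between samples of 1,000 cannot be distinguished from noise at the conventional 5% level.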

These methodologies demonstrate how modern research adapts to societal changes. By combining rigorous statistical standards with adaptive data collection, institutions maintain relevance in measuring evolving technological perceptions.

Public Attitudes Towards AI in the Workplace

The integration of advanced technologies into professional environments has sparked intense debates about workforce evolution. Nearly 1 in 5 U.S. workers hold positions highly exposed to automated systems, particularly in analytical roles requiring college degrees. This concentration creates distinct challenges for different worker groups.

Impact on Job Security and Skills

Most American adults (56%) anticipate reduced employment opportunities due to technological advancements. However, only 28% believe these changes will personally affect their jobs. This perception gap highlights a psychological phenomenon where people recognize systemic impacts while maintaining individual optimism.

Skill development patterns reveal educational divides:

- 55% of degree-holding workers actively learn new technical competencies

- 27% without degrees pursue similar training

Use of Automated Systems for Hiring and Monitoring

Two-thirds of Americans say they would not want to apply for a job with an employer that uses intelligent tools to help make hiring decisions. Concerns about the ethics of automated decision-making drive this reluctance, despite potential efficiency gains in candidate screening.

| Monitoring Type | Share of Workers Who Find It Acceptable |
|---|---|
| Productivity Tracking | 61% |
| Personal Behavior Analysis | 34% |

Workers show greater resistance to surveillance extending beyond work outputs. Companies implementing these systems must balance operational needs with privacy expectations to maintain workforce trust.

Evolving Trends in Artificial Intelligence Usage

Generative tools are reshaping how people interact with digital systems, creating distinct patterns of adoption across age groups and professions. Recent data show that 58% of U.S. adults have heard of ChatGPT, yet only 18% have used it, a gap that highlights the difference between awareness and practical application.

Adoption of Generative AI Tools

Usage rates reveal sharp generational divides. While 67% of teenagers have heard of ChatGPT and 19% of those teens have used it for schoolwork, adults over 45 show 3x lower engagement. College graduates use these tools 2.6x more frequently than those without degrees, suggesting education affects comfort with emerging technologies.

Practical applications dominate regular use:

- 37% leverage tools for research tasks

- 24% use them to assist with professional projects

- 16% generate images for creative needs

Image creation represents a breakthrough in accessibility—users without design training now produce visual content through text prompts. “The ability to manifest ideas visually changes how we approach creative work,” observes a Stanford digital media researcher.

Teen adoption patterns raise questions about academic integrity. While 69% approve using generative tools for research assistance, most reject their use in essay writing. This selective acceptance indicates users evaluate ethical boundaries based on task complexity and perceived value.

Analyzing Health and Safety Concerns with AI

Medical innovations often spark both hope and hesitation. Six-in-ten U.S. adults express discomfort with healthcare providers using advanced systems for treatment decisions. Yet 65% would embrace these tools for skin cancer screenings—a paradox showing context-dependent trust.

Privacy and Data Security Issues

Three-quarters of Americans fear rushed implementation in medical settings. This concern stems from data vulnerabilities: 82% believe personal health information could be misused through technological systems. “Security breaches aren’t hypothetical—they directly impact patient outcomes,” states a cybersecurity analyst from Johns Hopkins.

Trust in automated medical advice remains low. Only 22% say they trust system-generated health information almost always or most of the time:

- 4% trust recommendations “almost always”

- 18% accept them “most times”

- 51% approve “only sometimes”

These attitudes highlight critical challenges for healthcare technology integration. Adults particularly worry about insurance companies accessing sensitive data through third-party platforms. Recent proposals suggest encrypted data storage and strict access controls could rebuild public confidence.

Ongoing debates focus on balancing innovation speed with safety protocols. While 58% acknowledge potential diagnostic benefits, 63% demand extended testing periods before widespread clinical adoption. This cautious approach reflects broader societal priorities—placing patient security above technological novelty.

The Role of Government and Regulation in AI

Balancing innovation with accountability emerges as a critical challenge for modern governance. Recent surveys reveal 67% of tech-aware citizens worry more about insufficient oversight than excessive regulation. This preference for proactive measures reflects growing demands for ethical frameworks in system development.

Calls for Transparency in Tech Practices

Three-quarters of Americans demand clearer disclosures from businesses using advanced tools. A 2023 study shows 73% believe companies withhold critical details about automated decision-making processes. “Transparency builds trust,” notes a Georgetown policy analyst. “When people understand how systems impact daily choices, confidence in their use grows.”

Oversight and the Future of Regulatory Approaches

Public expectations for specialized testing standards reveal nuanced views on safety. Driverless vehicle regulation exemplifies this trend: 87% favor holding driverless vehicles to stricter standards than those applied to conventional cars. Lawmakers now face pressure to create adaptive policies addressing both current technology applications and future innovations.

While 69% criticize current government efforts as inadequate, bipartisan proposals suggest new ways to strengthen oversight. These discussions emphasize collaborative models where regulators work with developers to assess societal impacts before widespread deployment. The path forward requires balancing protective measures with support for beneficial technological progress.