Anthropic unveiled Claude 3.5 Sonnet on Thursday, claiming it outperforms the company’s previous AI models and OpenAI’s newly introduced GPT-4 Omni on multiple metrics. The company also introduced Artifacts, a new interactive workspace within Claude where users can edit what Claude generates, such as a playable crab video game.

“Our goal with Claude isn’t to create an incrementally better LLM but to develop an AI system that can work alongside people and software in meaningful ways,” said Anthropic co-founder and CEO Dario Amodei in a press release. “Features like Artifacts are early experiments in this direction.”

This is the first release in Anthropic’s Claude 3.5 model series, following the launch of its Claude 3 models three months ago. Anthropic is striving to keep pace with OpenAI’s product releases. While today brings the 3.5 version of Sonnet, the mid-tier model, Anthropic plans to release 3.5 versions of Haiku (entry-level) and Opus (most advanced) later this year, and is exploring features like web search and memory for upcoming releases.

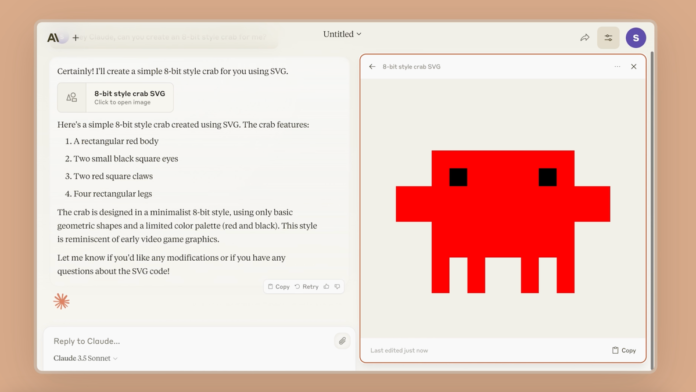

Anthropic aims not only to release better models but also to add features and capabilities that make its AI more practical. With Artifacts, users can develop interactive projects within Claude. In a demo, the startup shows how users can create characters and icons in an AI-generated 8-bit crab video game and make changes as they go.

Billed as Anthropic’s most powerful vision model yet, Claude 3.5 Sonnet is said to excel at visual reasoning, chart interpretation, and transcribing text from imperfect images. Anthropic asserts that Claude 3.5 Sonnet outperforms GPT-4 Omni on several vision tasks, particularly in understanding charts, documents, and math. Anthropic also says the new Claude surpasses OpenAI’s ChatGPT in coding and reasoning.

Notably, Anthropic says Claude 3.5 Sonnet has gotten better at discerning which questions to answer and which to avoid. AI chatbots are often criticized for dodging certain queries. Gizmodo looked into AI censorship a few months ago and found that Anthropic’s Claude evaded many questions. With Claude 3.5, Anthropic expresses confidence in Claude’s ability to handle inappropriate or potentially harmful questions.