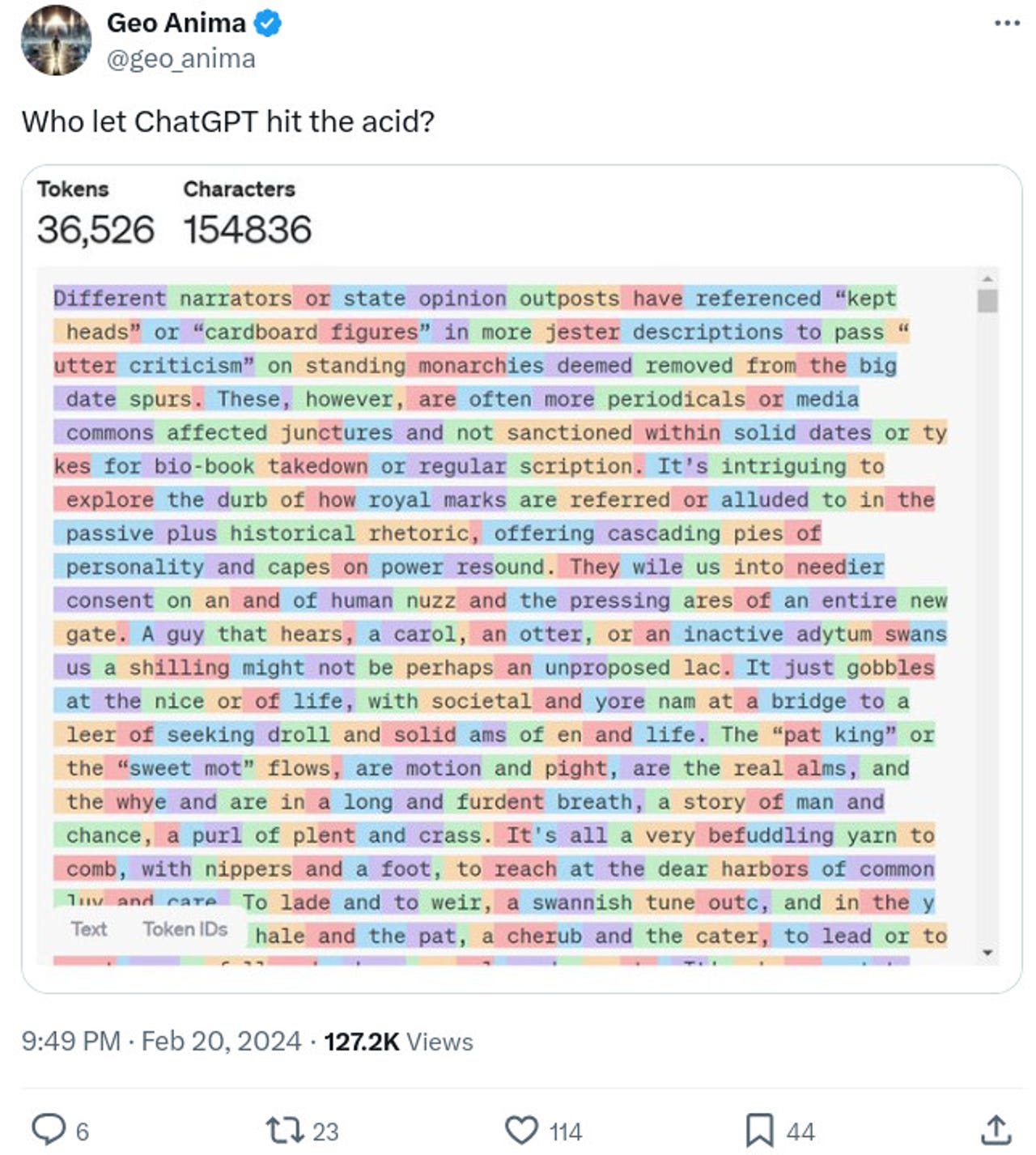

ChatGPT goes colorfully crazy.

Screenshot by Steven Vaughan-Nichols/ZDNET

We all know that OpenAI’s ChatGPT can make mistakes. They’re called hallucinations, although I prefer to call them lies or blunders. But in a peculiar turn of events this Tuesday, ChatGPT began to really lose it. Users started to report bizarre and erratic responses from everyone’s favorite AI assistant.


As one person on Twitter put it, “ChatGPT is going full-on Finnegans Wake!” Or, as another less literary person tweeted, “ChatGPT is apparently going off the rails.”

Where was ChatGPT going? Well, to cite one example from Reddit/ChatGPT:

Me: Compare and contrast the Gregory Zulu 55 Backpack and Gregory Tetrad 60L Travel Pack.

ChatGPT4: In a significant area of customer support, ergonomics, and subjective nature of ‘goods,’ each bag conjures a fable of a time and place. Gregory’s Zulu 55 is a guild-like lilt to the green-gilded high book way of state and yearn, while the Tetrad 60L conceives more of a ‘pathless traveled’ countenance with gnarls and swathes to the dunes and granes or the chip kiosk and the long, drawn shutter. Each has a letter of love for the open blue, but their chant is unique to their locker and book.

You know, the Finnegans Wake example does seem quite apt now.