The race to develop fully autonomous vehicles has accelerated dramatically in recent years, with artificial intelligence serving as the engine behind this transportation revolution. AI in self-driving cars represents one of the most ambitious applications of machine learning technology, promising to fundamentally transform how we move from place to place. These intelligent systems process vast amounts of data from multiple sensors to navigate complex environments, recognize objects, and make split-second decisions—all without human intervention.

Yet despite remarkable progress, the gap between current capabilities and the dream of ubiquitous self-driving cars remains significant. This article examines how AI powers autonomous vehicles, the technological and practical hurdles still to overcome, and the realistic timeline for widespread adoption. By understanding both the potential and limitations of AI in this field, we can better appreciate the complex journey toward truly autonomous transportation.

The Core AI Technologies Driving Autonomous Vehicles

Self-driving cars rely on a sophisticated ecosystem of AI technologies working in concert to replicate and enhance human driving capabilities. These systems must simultaneously perceive the environment, predict the behavior of other road users, plan appropriate actions, and execute them safely—all in real-time and under varying conditions.

Machine Learning for Object Detection and Classification

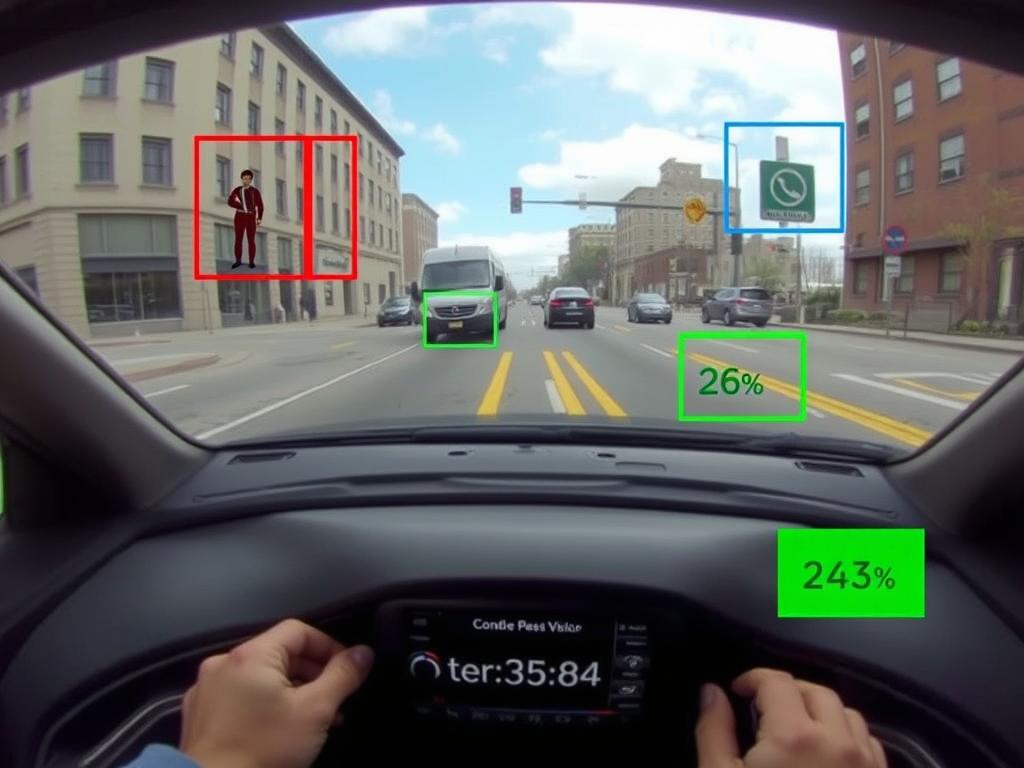

At the heart of autonomous driving is the ability to recognize and classify objects in the vehicle’s surroundings. Machine learning algorithms, particularly deep learning models, enable self-driving cars to identify pedestrians, vehicles, traffic signs, lane markings, and other critical elements from sensor data.

These systems are trained on massive datasets containing millions of labeled images and sensor readings. Through this training, they learn to distinguish between different objects and understand their significance for driving decisions. For example, the system must differentiate between a pedestrian about to cross the street and a stationary traffic pole, as each requires a different response.
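Detection models usually output many overlapping candidate boxes for the same object, each with a confidence score. A standard post-processing step called non-maximum suppression (NMS) keeps the highest-scoring box and discards boxes that overlap it too much. The minimal sketch below assumes axis-aligned boxes in `(x1, y1, x2, y2)` form and an illustrative overlap (IoU) threshold of 0.5. Production stacks typically use optimized library implementations instead.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of boxes kept by greedy NMS, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    # Two overlapping detections of one pedestrian plus one separate vehicle.
    boxes = [(10, 10, 50, 100), (12, 8, 52, 98), (200, 40, 320, 120)]
    scores = [0.92, 0.85, 0.77]
    print(non_max_suppression(boxes, scores))  # [0, 2]
```

The threshold is a trade-off. Set it too low and two genuinely close objects, such as pedestrians walking side by side, may be merged into one. Set it too high and duplicate boxes survive.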

Neural Networks for Decision-Making

Neural networks form the backbone of the decision-making processes in autonomous vehicles. These computational models are inspired by the human brain and consist of interconnected layers of artificial neurons that process and transmit information.

In self-driving cars, neural networks analyze sensor data to support driving decisions. Convolutional Neural Networks (CNNs) excel at image recognition tasks, while Recurrent Neural Networks (RNNs) process sequential data and can help predict how objects will move over time. Most of these networks are trained offline with supervised learning on labeled data and improved through later software updates. Reinforcement learning, in which a system learns behaviors by receiving feedback (rewards or penalties) on its actions, is also being explored, mainly for planning and control and often in simulation.
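The core operation inside a CNN layer is to slide a small filter (kernel) across an image and compute a weighted sum at each position. In the minimal sketch below, the 3×3 kernel is hand-picked to respond to vertical edges, such as lane paint against asphalt. In a real CNN the kernel weights are learned from data, and many such filters are stacked in layers. As in most deep learning libraries, the operation is technically cross-correlation, because the kernel is not flipped.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """2D cross-correlation with stride 1 and no padding ("valid" mode)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out


# Hand-picked vertical-edge kernel; a CNN would learn weights like these.
VERTICAL_EDGE = [
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
]

if __name__ == "__main__":
    # Dark road (0) on the left, bright lane paint (1) on the right.
    image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]
    for row in conv2d_valid(image, VERTICAL_EDGE):
        print(row)  # [0.0, 3.0, 3.0, 0.0]: strong response only at the edge
```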

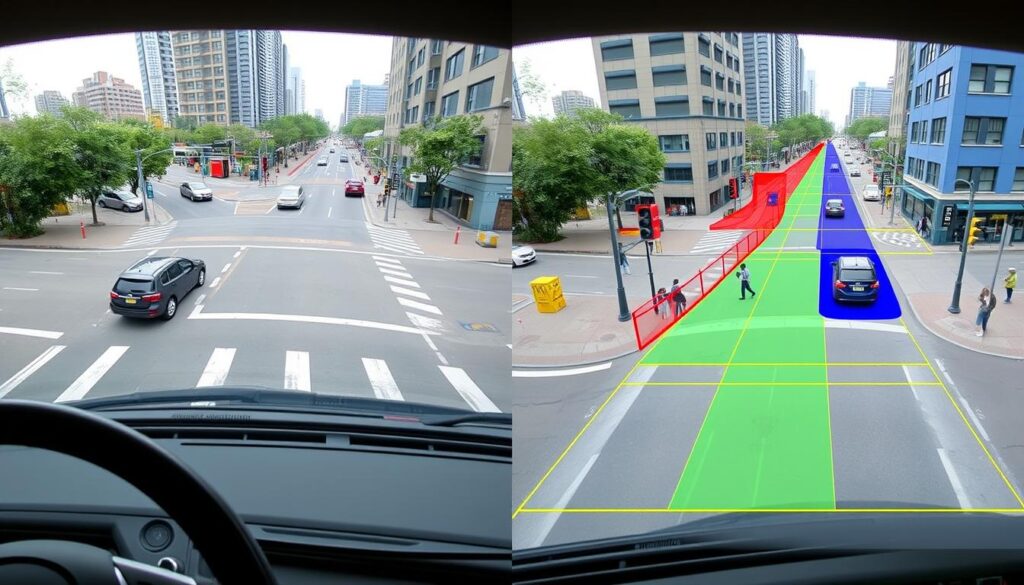

Computer Vision for Environmental Mapping

Computer vision algorithms enable self-driving cars to interpret visual information from cameras. These systems can detect lane boundaries, read traffic signs, and identify obstacles. They are designed to cope with challenging lighting and partially obstructed views, although these remain difficult cases.

Advanced computer vision techniques such as semantic segmentation assign a class label (road, sidewalk, vehicle, pedestrian, and so on) to every pixel, which helps the vehicle understand the structure of its environment. Simultaneous Localization and Mapping (SLAM) algorithms let the car build and update a map of its surroundings while tracking its own position within that map.
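A full SLAM system is well beyond a short example. The mapping half, however, can be illustrated with a log-odds occupancy grid, a standard representation in robotics. The sketch below assumes the vehicle’s pose is already known, so it covers mapping only and not full SLAM. It traces each range-sensor beam across grid cells with Bresenham’s line algorithm and uses illustrative hit and miss probabilities of 0.7 and 0.4.

```python
import math
from typing import Dict, List, Tuple

Cell = Tuple[int, int]

# Illustrative sensor model (assumed values): the beam endpoint is occupied with
# p = 0.7; cells the beam passes through get p = 0.4, i.e. they are probably free.
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.4 / 0.6)


def bresenham(start: Cell, end: Cell) -> List[Cell]:
    """Grid cells on the line from start to end, inclusive (Bresenham's algorithm)."""
    (x0, y0), (x1, y1) = start, end
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    cells = []
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_beam(grid: Dict[Cell, float], sensor: Cell, hit: Cell) -> None:
    """Log-odds update: cells before the hit look free, the hit cell looks occupied."""
    cells = bresenham(sensor, hit)
    for cell in cells[:-1]:
        grid[cell] = grid.get(cell, 0.0) + L_FREE
    grid[hit] = grid.get(hit, 0.0) + L_OCC


def probability(log_odds: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


if __name__ == "__main__":
    grid: Dict[Cell, float] = {}
    for _ in range(3):  # the same obstacle seen in three consecutive scans
        integrate_beam(grid, (0, 0), (4, 0))
    print(round(probability(grid[(4, 0)]), 3))  # 0.927
    print(round(probability(grid[(2, 0)]), 3))  # 0.229
```

Working in log-odds turns each Bayesian update into a simple addition. This is why the representation is popular for maps that are refreshed many times per second.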

Real-World Implementation of AI in Autonomous Vehicles

The theoretical capabilities of AI in self-driving cars are being put to the test by various companies, each with its own approach to autonomous technology. These real-world deployments provide valuable insight into the current state of the technology and its practical applications.

Tesla’s Autopilot and Full Self-Driving

Tesla has taken a camera-centric approach to autonomous driving with its Autopilot and Full Self-Driving (FSD) systems. Unlike competitors that rely heavily on lidar, Tesla’s AI primarily processes visual data from eight external cameras. Earlier vehicles also carried radar and ultrasonic sensors, but Tesla began removing both from new vehicles in 2021 and 2022 in favor of a camera-only approach it calls Tesla Vision.

The company trains its neural networks on data collected from its fleet of over a million vehicles. In what Tesla calls “shadow mode,” the software runs in the background without controlling the car, so its decisions can be compared with what the human driver actually did. Combined with over-the-air software updates, this fleet data allows Tesla to gradually expand the capabilities of vehicles already on the road.
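Tesla has not published how shadow mode is implemented, so the sketch below is purely hypothetical. It compares a model’s predicted steering angle with what the human driver actually did and flags frames where the two disagree by more than a chosen threshold. A fleet-learning pipeline could use this kind of signal to select interesting clips for upload and labeling. The function name, the 5-degree threshold, and the data format are all assumptions.

```python
from typing import List, Sequence


def flag_disagreements(predicted_deg: Sequence[float],
                       human_deg: Sequence[float],
                       threshold_deg: float = 5.0) -> List[int]:
    """Hypothetical shadow-mode trigger (not Tesla's actual method).

    The model's output never controls the car; it is only compared with the
    human driver's steering angle, frame by frame. Returns the indices of
    frames where the two differ by more than threshold_deg.
    """
    if len(predicted_deg) != len(human_deg):
        raise ValueError("predicted and human sequences must have equal length")
    return [
        i for i, (p, h) in enumerate(zip(predicted_deg, human_deg))
        if abs(p - h) > threshold_deg
    ]


if __name__ == "__main__":
    model = [0.0, 1.5, 2.0, 12.0, 3.0]   # degrees: what the model would have done
    driver = [0.2, 1.0, 2.5, 1.0, 3.5]   # degrees: what the human actually did
    print(flag_disagreements(model, driver))  # [3]
```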

Waymo’s Autonomous Taxi Service

Waymo, a subsidiary of Alphabet Inc., has developed one of the most advanced autonomous driving systems currently in commercial operation. Its approach combines lidar, radar, and cameras to create a comprehensive view of the vehicle’s surroundings.

Waymo’s vehicles combine this live sensor data with detailed 3D maps of their service areas, built in advance, to navigate complex urban streets. The company operates a commercial autonomous taxi service, Waymo One, in Phoenix, Arizona, demonstrating the viability of self-driving technology for public transportation.
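Before a lidar scan can contribute to a 3D map, each return is typically converted into Cartesian x, y, z coordinates in the sensor’s frame. A return is reported as a range plus the beam’s horizontal (azimuth) and vertical (elevation) angles. The minimal sketch below assumes a common convention: x forward, y left, z up, with angles in radians. Real sensors document their own conventions and calibration offsets, which are omitted here.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def lidar_to_xyz(range_m: float, azimuth_rad: float, elevation_rad: float) -> Point:
    """Convert one spherical lidar return to Cartesian coordinates.

    Assumed convention: x forward, y left, z up; azimuth is measured from the
    x-axis toward y, elevation is measured up from the horizontal plane.
    """
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the ground plane
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


def scan_to_point_cloud(returns: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """Convert a sequence of (range, azimuth, elevation) returns into a point cloud."""
    return [lidar_to_xyz(r, az, el) for r, az, el in returns]


if __name__ == "__main__":
    scan = [(10.0, 0.0, 0.0), (5.0, math.pi / 2, 0.0), (2.0, 0.0, math.pi / 6)]
    for x, y, z in scan_to_point_cloud(scan):
        print(round(x, 3), round(y, 3), round(z, 3))
```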

Other Notable Players and Approaches

Several other companies are making significant contributions to autonomous vehicle technology:

- Cruise (GM) uses a multi-sensor approach with high-definition maps for urban environments

- Mobileye (Intel) focuses on computer vision and crowd-sourced mapping

- Nvidia provides AI computing platforms that power many autonomous vehicle systems

- Baidu’s Apollo project offers an open platform for autonomous driving development

Each of these approaches has its strengths and limitations, reflecting the diverse strategies being employed to solve the complex challenge of autonomous driving.

Current Limitations and Challenges of AI in Self-Driving Cars

Despite impressive advances, several significant hurdles still prevent the widespread deployment of fully autonomous vehicles. These challenges span technical, ethical, regulatory, and social domains, each requiring innovative solutions.

Technical Limitations and Edge Cases

AI systems in self-driving cars excel at handling common driving scenarios but struggle with unusual situations or “edge cases” that rarely occur in training data. These can include:

- Unusual weather conditions like heavy snow, fog, or glare that interfere with sensors

- Temporary road configurations due to construction or accidents

- Unexpected human behavior, such as a police officer manually directing traffic

- Novel objects or situations not represented in training data

These edge cases are particularly challenging because they’re difficult to anticipate and occur infrequently, making it hard to gather sufficient training data. Yet autonomous vehicles must handle these situations safely to be considered truly reliable.
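A simple, widely used baseline for noticing that an input may be unlike the training data is to look at the classifier’s confidence. If the highest softmax probability falls below a threshold, the input is flagged for a cautious fallback behavior or for later review. The sketch below assumes the model’s raw scores (logits) are available and uses an illustrative threshold of 0.6. Low confidence is only a rough proxy, because models can be confidently wrong on novel inputs, which is part of why edge cases remain hard.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtracting the max avoids overflow)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_with_flag(logits: Sequence[float], labels: Sequence[str],
                       threshold: float = 0.6) -> Tuple[str, float, bool]:
    """Return (label, confidence, is_uncertain) using max-softmax confidence."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best], probs[best] < threshold


if __name__ == "__main__":
    labels = ["pedestrian", "vehicle", "traffic_cone"]
    print(classify_with_flag([4.0, 0.5, 0.2], labels))  # confident "pedestrian"
    print(classify_with_flag([1.1, 1.0, 0.9], labels))  # flagged as uncertain
```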

Ethical Dilemmas and Decision-Making

Self-driving cars must sometimes make split-second decisions with ethical implications. The classic “trolley problem” illustrates this challenge: if an accident is unavoidable, how should the AI decide which harm to cause? Should it prioritize:

- Protecting its passengers at all costs?

- Minimizing total casualties, even at the expense of passengers?

- Protecting the most vulnerable road users like children?

These questions have no universally agreed-upon answers, yet AI systems require explicit programming or training to handle such scenarios. Different cultural and legal contexts may also demand different approaches, complicating the development of globally deployable autonomous systems.

Regulatory and Certification Challenges

The regulatory framework for autonomous vehicles is still evolving, creating uncertainty for developers and manufacturers. Key challenges include:

- Establishing safety standards and testing protocols for AI systems

- Determining liability in case of accidents involving autonomous vehicles

- Creating certification processes that can keep pace with rapidly evolving technology

- Harmonizing regulations across different jurisdictions

Without clear regulatory guidance, companies face uncertainty about what level of performance is required for legal operation, potentially slowing innovation and deployment.

Public Trust and Adoption Barriers

Even with technical and regulatory hurdles overcome, autonomous vehicles face the challenge of public acceptance. Surveys consistently show that many people remain uncomfortable with the idea of surrendering control to an AI system. Concerns include:

- Fear of technology failure or malfunction

- Discomfort with surrendering control of safety-critical decisions

- Privacy concerns related to the vast amount of data collected by autonomous vehicles

- Potential job displacement for professional drivers

Building public trust requires not only demonstrating the safety and reliability of autonomous systems but also addressing these broader societal concerns.

The Reality Gap: Comparing Progress with Promises

The development of autonomous vehicles has been accompanied by bold predictions about their imminent widespread adoption. However, the reality has often fallen short of these optimistic timelines, revealing the complexity of the challenge.

Timeline Discrepancies

Several major players in the autonomous vehicle space have made predictions that later proved overly optimistic:

| Company | Prediction | Year Made | Actual Outcome |
| --- | --- | --- | --- |
| Tesla | Full self-driving capability by 2018 | 2016 | Still in limited beta testing as of 2023 |
| Waymo | Commercial self-driving taxis by 2018 | 2017 | Limited service in Phoenix; expansion slower than predicted |
| GM/Cruise | Mass production of driverless cars by 2019 | 2017 | Limited nighttime service in San Francisco with ongoing safety concerns |
| Ford | Level 4 autonomous vehicles by 2021 | 2017 | Scaled back ambitions; refocused on more limited autonomy |

These discrepancies highlight the tendency toward “AI optimism” in the industry—underestimating the complexity of edge cases and overestimating the rate of technological progress.

Marketing Claims vs. Technical Reality

The terminology used to describe autonomous systems often creates confusion about their actual capabilities. Terms like “Autopilot,” “Full Self-Driving,” and “autonomous” may suggest more advanced capabilities than the systems actually possess. This disconnect can lead to:

- Misunderstanding by consumers about the level of supervision required

- Inappropriate use of driver assistance systems

- Erosion of trust when marketed capabilities don’t match real-world performance

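Regulatory bodies have begun to scrutinize these marketing claims more closely, and some now require more precise descriptions of system capabilities and limitations. California, for example, passed a law in 2022 that bars manufacturers and dealers from naming or marketing partial driving automation features in ways that imply the vehicle is autonomous.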

The Path Forward: Realistic Expectations

A more realistic view of autonomous vehicle development acknowledges that progress will likely be incremental rather than revolutionary. We can expect:

- Gradual expansion of operational domains (geographic areas, weather conditions, etc.)

- Continued improvement in handling edge cases through more diverse training data

- Initial commercial deployment in controlled environments like highway driving or fixed routes

- Ongoing need for human supervision in complex environments for the foreseeable future

This measured approach recognizes the significant achievements in autonomous driving technology while acknowledging the substantial challenges that remain.

Future Developments and Emerging Technologies

Despite current limitations, ongoing research and development continue to advance the capabilities of AI in self-driving cars. Several promising technologies and approaches could help overcome existing challenges.

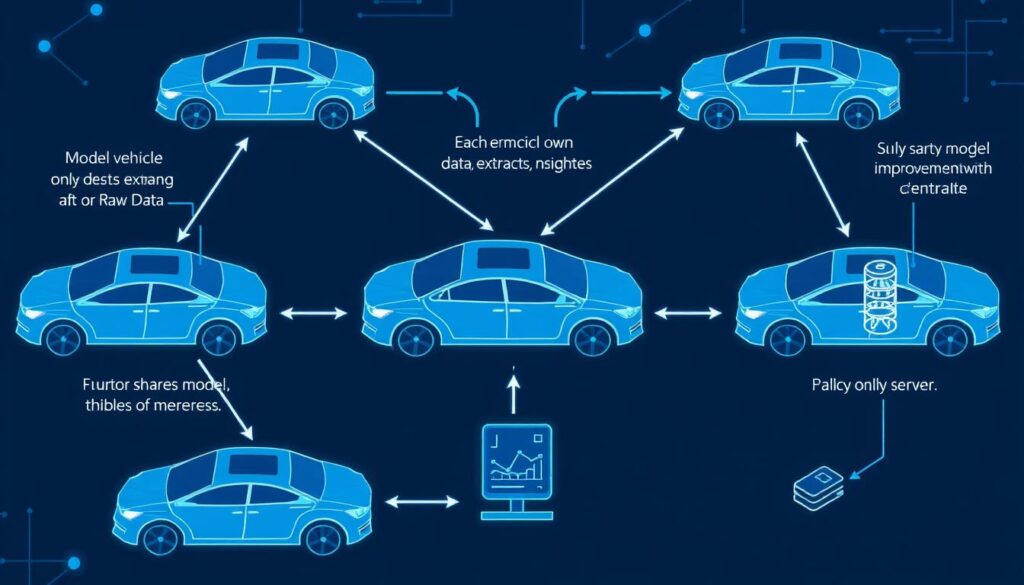

Federated Learning and Collaborative AI

Traditional machine learning requires centralizing data for training, raising privacy concerns and limiting the diversity of training examples. Federated learning offers an alternative approach where:

- AI models are trained across multiple devices or servers without exchanging raw data

- Only model updates are shared, preserving privacy while allowing collective improvement

- Vehicles can learn from each other’s experiences without compromising user data

This approach could accelerate the handling of edge cases by allowing autonomous vehicles to learn from rare events encountered by any vehicle in a fleet.
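Federated averaging (FedAvg) is the canonical algorithm behind this idea. Each client trains on its own data, then a server averages the resulting model weights, weighting each client by how many training examples it used. The sketch below represents a model as a flat list of floats and simulates local training with a few gradient steps on a toy one-parameter linear model. These simplifications, and the function names, are assumptions for illustration only.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Dataset = Sequence[Tuple[float, float]]  # (x, y) pairs that never leave the vehicle


def local_update(weights: Weights, data: Dataset, lr: float = 0.01, epochs: int = 5) -> Weights:
    """Toy local training: fit y = w * x by gradient descent on mean squared error."""
    w = weights[0]
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return [w]


def federated_average(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """Average client models, weighting each by its number of local examples."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


def federated_round(global_weights: Weights, clients: List[Dataset]) -> Weights:
    """One FedAvg round: only updated weights, never raw data, reach the server."""
    updates = [local_update(list(global_weights), data) for data in clients]
    return federated_average(updates, [len(data) for data in clients])


if __name__ == "__main__":
    # Three simulated vehicles whose local data all follow y = 2x.
    fleet = [
        [(1.0, 2.0), (2.0, 4.0)],
        [(3.0, 6.0)],
        [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
    ]
    w = [0.0]
    for _ in range(20):
        w = federated_round(w, fleet)
    print(round(w[0], 3))  # approaches 2.0
```

In a real deployment, the updates would typically also be compressed, secured, or aggregated with privacy protections before reaching the server. None of that is shown here.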

Explainable AI for Transparency

Current deep learning systems often function as “black boxes,” making decisions that humans cannot easily interpret or verify. Explainable AI (XAI) aims to create systems that can:

- Provide understandable explanations for their decisions

- Allow humans to audit and verify AI reasoning

- Build trust by making the decision-making process transparent

For self-driving cars, XAI could help regulators certify systems, assist developers in debugging edge cases, and increase user trust by explaining vehicle behaviors.
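One simple explanation technique is occlusion sensitivity. You cover part of the input, re-run the model, and record how much its output drops. Regions whose occlusion causes large drops are the ones the model relied on. In the sketch below, the “model” is a hypothetical stand-in function that scores an image by the brightness of its top-left region. With a real network, the same loop would call the trained model instead.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(model: Callable[[Image], float], image: Image,
                  patch: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Score drop when each patch x patch block is replaced by `fill`.

    Larger values mean the model depended more on that region.
    """
    baseline = model(image)
    h, w = len(image), len(image[0])
    heatmap = []
    for r in range(0, h, patch):
        row = []
        for c in range(0, w, patch):
            occluded = [list(line) for line in image]  # copy so the input is untouched
            for i in range(r, min(r + patch, h)):
                for j in range(c, min(c + patch, w)):
                    occluded[i][j] = fill
            row.append(baseline - model(occluded))
        heatmap.append(row)
    return heatmap


def toy_model(image: Image) -> float:
    """Hypothetical stand-in for a detector: it only looks at the top-left 2x2 region."""
    return sum(image[i][j] for i in range(2) for j in range(2))


if __name__ == "__main__":
    img = [[1.0] * 4 for _ in range(4)]
    for row in occlusion_map(toy_model, img):
        print(row)  # only the top-left block shows a drop
```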

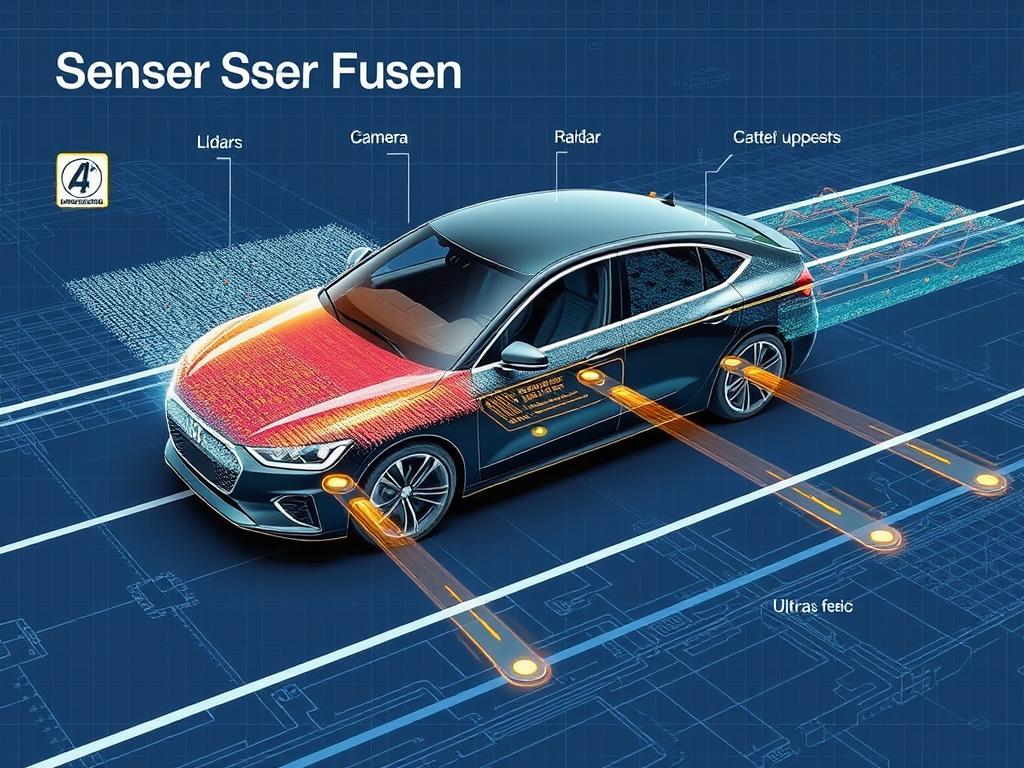

Sensor Fusion and Advanced Perception

Improving how autonomous vehicles perceive their environment remains a critical area of development. Advances include:

- More sophisticated sensor fusion algorithms that combine data from multiple sensor types

- Solid-state lidar systems that are more reliable and less expensive than mechanical versions

- Improved computer vision that works in adverse weather and lighting conditions

- 4D radar systems that provide better resolution and velocity detection

These technologies promise to reduce perception errors, which are a common cause of autonomous driving failures.
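A basic building block of sensor fusion is combining two noisy measurements of the same quantity, weighting each by how much it is trusted. For independent Gaussian errors, the optimal combination weights each measurement by the inverse of its variance. For a single static quantity, this is also what a Kalman filter’s update step reduces to. The sketch assumes, purely for illustration, a radar range with a 0.5 m standard deviation and a camera-based range estimate with a 2 m standard deviation.

```python
from typing import Tuple


def fuse(z1: float, var1: float, z2: float, var2: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent measurements.

    Returns (fused_estimate, fused_variance). The fused variance is always
    smaller than either input variance.
    """
    w1, w2 = 1.0 / var1, 1.0 / var2
    estimate = (w1 * z1 + w2 * z2) / (w1 + w2)
    variance = 1.0 / (w1 + w2)
    return estimate, variance


if __name__ == "__main__":
    radar_range, radar_var = 40.0, 0.5 ** 2    # metres, m^2 (assumed values)
    camera_range, camera_var = 43.0, 2.0 ** 2  # metres, m^2 (assumed values)
    est, var = fuse(radar_range, radar_var, camera_range, camera_var)
    print(f"fused range {est:.2f} m, std {var ** 0.5:.2f} m")  # fused range 40.18 m, std 0.49 m
```

The fused estimate sits close to the more precise radar reading but is still nudged by the camera. Its uncertainty is lower than that of either sensor alone, which is the basic payoff of fusion.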

Conclusion: The Evolving Landscape of AI in Autonomous Vehicles

The journey toward fully autonomous vehicles powered by AI represents one of the most ambitious technological endeavors of our time. While significant progress has been made, the gap between current capabilities and the vision of ubiquitous self-driving cars remains substantial.

The most realistic assessment suggests that autonomous driving will evolve through progressive improvements rather than a sudden revolution. We can expect to see continued expansion of capabilities in specific domains and use cases, with full autonomy in all conditions remaining a longer-term goal.

For stakeholders across the ecosystem—from technology developers to policymakers, from automotive manufacturers to consumers—maintaining a balanced perspective is essential. This means acknowledging both the tremendous potential of AI in self-driving cars and the significant challenges that must still be overcome.

By fostering collaboration between industry, government, and academia, and by setting realistic expectations about the pace of progress, we can navigate the complex transition to a transportation system where AI plays an increasingly important role in enhancing safety, efficiency, and accessibility.

Frequently Asked Questions About AI in Self-Driving Cars

How do self-driving cars use AI to navigate?

Self-driving cars use AI to process data from various sensors (cameras, lidar, radar) to create a detailed map of their surroundings. Machine learning algorithms identify objects, predict their movements, and plan appropriate driving actions. Neural networks make decisions about steering, acceleration, and braking based on this processed information, allowing the vehicle to navigate safely without human intervention.
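The perceive, predict, and plan loop can be illustrated with a deliberately tiny example. Perception supplies a tracked object’s distance and relative speed. Prediction extrapolates the gap with a constant-velocity model. Planning brakes if the predicted gap after a short horizon falls below a safety margin. Real planners are far more sophisticated. The function names, the one-dimensional lane geometry, the 2-second horizon, and the 5 m margin are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Output of perception: one object ahead in our lane (1D, metres and m/s)."""
    gap_m: float          # distance from our front bumper to the object
    rel_speed_mps: float  # object speed minus our speed (negative = closing in)


def predict_gap(track: Track, horizon_s: float) -> float:
    """Prediction: constant-velocity extrapolation of the gap."""
    return track.gap_m + track.rel_speed_mps * horizon_s


def plan(track: Track, horizon_s: float = 2.0, min_gap_m: float = 5.0) -> str:
    """Planning: brake if the predicted gap after horizon_s drops below min_gap_m."""
    if predict_gap(track, horizon_s) < min_gap_m:
        return "brake"
    return "maintain"


if __name__ == "__main__":
    print(plan(Track(gap_m=30.0, rel_speed_mps=-5.0)))  # gap in 2 s: 20 m -> maintain
    print(plan(Track(gap_m=12.0, rel_speed_mps=-6.0)))  # gap in 2 s: 0 m  -> brake
```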

What are the different levels of autonomous driving?

The Society of Automotive Engineers (SAE) defines six levels of driving automation:

- Level 0: No automation; the driver performs all tasks

- Level 1: Driver assistance; the vehicle can assist with steering OR acceleration/deceleration

- Level 2: Partial automation; the vehicle can assist with steering AND acceleration/deceleration

- Level 3: Conditional automation; the vehicle can drive itself under limited conditions but requires driver takeover when requested

- Level 4: High automation; the vehicle can drive itself without driver intervention, but only within a defined operational design domain (for example, a specific mapped area or set of conditions)

- Level 5: Full automation; the vehicle can drive itself under all conditions

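Most current consumer vehicles with “self-driving” features operate at Level 2. A few systems, such as Mercedes-Benz’s Drive Pilot, have been approved for Level 3 operation in narrowly defined conditions, such as heavy traffic on certain highways.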
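Software that reasons about these levels, for example to decide whether driver monitoring is needed, can capture the key distinction in a small data structure. The sketch below encodes the SAE J3016 levels as an enum. The helper names are hypothetical. The split they encode is that a human must supervise at Levels 0 to 2, must be ready to take over on request at Level 3, and is not needed as a fallback at Levels 4 and 5.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 levels of driving automation."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """Levels 0-2: the human is driving and must monitor the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def driver_is_fallback(level: SAELevel) -> bool:
    """Levels 0-3: a human must be available to drive (at Level 3, when requested)."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, driver_must_supervise(level), driver_is_fallback(level))
```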

Are self-driving cars safer than human drivers?

The safety comparison between AI and human drivers is complex and still evolving. AI systems don’t get distracted, tired, or impaired, which are common factors in human-caused accidents. However, current AI systems struggle with unusual scenarios that experienced human drivers can handle intuitively.

Early data suggests that advanced driver assistance systems reduce certain types of accidents, but fully autonomous systems haven’t yet accumulated enough miles in diverse conditions to support definitive safety comparisons. Many experts expect AI to eventually surpass human safety records, but the technology is still in a transitional period of maturation.
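A back-of-the-envelope calculation shows why so many miles are needed. Suppose failures such as fatal crashes are modeled as a Poisson process with rate λ per mile, and a fleet drives n miles with zero such failures. The probability of that outcome is e^(-λn). To claim with 95% confidence that λ is below some target λ₀, we need e^(-λ₀n) ≤ 0.05, that is, n ≥ ln(20)/λ₀ ≈ 3/λ₀ (the “rule of three”). The target of 1 fatal crash per 100 million miles used below is an illustrative assumption, not a measured benchmark.

```python
import math


def miles_needed_zero_failures(target_rate_per_mile: float, confidence: float = 0.95) -> float:
    """Failure-free miles needed to bound a Poisson failure rate below the target.

    With zero failures in n miles, P(no failures | rate) = exp(-rate * n).
    Requiring exp(-target * n) <= 1 - confidence gives
    n >= -ln(1 - confidence) / target.
    """
    return -math.log(1.0 - confidence) / target_rate_per_mile


if __name__ == "__main__":
    target = 1 / 100_000_000  # illustrative: 1 fatal crash per 100 million miles
    print(f"{miles_needed_zero_failures(target):,.0f} miles")  # about 300 million
```

The result scales inversely with the target rate, and demonstrating a rate *better* than a human benchmark, rather than merely bounding it, requires even more driving.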

When will fully autonomous vehicles become commonplace?

Despite optimistic predictions in the past, most experts now believe that the widespread adoption of fully autonomous vehicles (Level 5) will take longer than initially anticipated. A more realistic timeline suggests:

- 2023-2025: Expanded deployment of Level 4 autonomy in limited, well-mapped areas and specific use cases (taxi services, highway driving)

- 2025-2030: Broader availability of Level 4 systems in consumer vehicles, with operation limited to certain conditions

- 2030 and beyond: Gradual progress toward Level 5 autonomy, with adoption rates varying by region based on infrastructure, regulation, and public acceptance

This timeline remains subject to technological breakthroughs, regulatory developments, and public acceptance.

What role does AI play in electric vehicles?

While autonomous driving gets most of the attention, AI also plays crucial roles in other aspects of electric vehicles:

- Battery Management: AI optimizes charging and discharging to maximize battery life and range

- Energy Efficiency: AI predicts energy needs based on route, driving style, and conditions

- Predictive Maintenance: AI detects potential issues before they cause failures

- Personalization: AI learns driver preferences to customize the driving experience

These applications help address key challenges for electric vehicles, such as range anxiety and battery longevity.