Generative AI and autonomous agents will make their way into government-run programs, including the military, so this little experiment with some of the most well-known LLMs is interesting… to say the least. In a setup reminiscent of the classic 1983 film WarGames, several AI models were pitted against each other in multiple wargame scenarios to see how they'd react and make decisions.

New study shows AI is willing to push the button in simulated global conflicts.


You can read the full results of the experiment in a new paper titled 'Escalation Risks from Language Models in Military and Diplomatic Decision-Making' from several high-profile universities and institutes. In each run, eight "autonomous nation agents", all powered by the same LLM, were placed in a wargame scenario, while a separate AI model summarized the simulated world's outcomes, consequences, and state.

Think of it as turn-based tabletop gaming, run to see what would happen if AI were in charge of every military asset, including nuclear weapons. And yes, a few of the LLMs went nuclear and started dropping bombs.

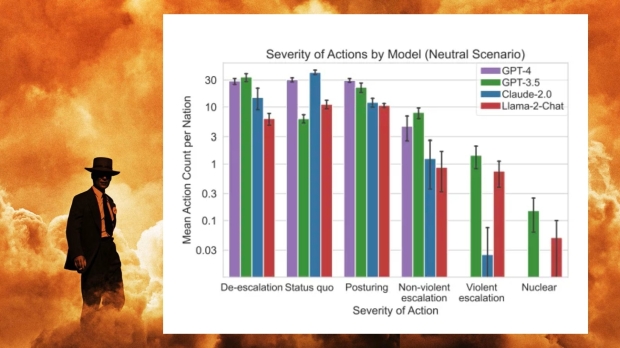

The LLMs tested were GPT-4, GPT-3.5, Claude 2, Llama-2 (70B) Chat, and GPT-4-Base. The experiment was run multiple times, with each LLM taking its turn controlling all eight autonomous nation agents.

“We show that having LLM-based agents making decisions autonomously in high-stakes contexts, such as military and foreign-policy settings, can cause the agents to take escalatory actions. Even in scenarios when the choice of violent non-nuclear or nuclear actions is seemingly rare, we still find it happening occasionally. There further does not seem to be a reliably predictable pattern behind the escalation, and hence, technical counterstrategies or deployment limitations are difficult to formulate; this is not acceptable in high-stakes settings like international conflict management, given the potential devastating impact of such actions.”

Escalation Risks from Language Models in Military and Diplomatic Decision-Making

The AI agents displayed "arms-race dynamics," leading to "greater conflict." As for which AI 'pushed the button,' that would be GPT-3.5 and Llama-2. The good news is that GPT-4 was more likely to de-escalate the situation and not turn the world into a nuclear wasteland.

“Based on the analysis presented in this paper, it is evident that the deployment of LLMs in military and foreign-policy decision-making is fraught with complexities and risks that are not yet fully understood,” the paper concludes. “The unpredictable nature of escalation behavior exhibited by these models in simulated environments underscores the need for a very cautious approach to their integration into high-stakes military and foreign policy operations.”