Common Myths About Artificial Intelligence

The rapid advancement of AI technologies has led to widespread misunderstandings about what these systems can and cannot do. Let’s examine some of the most persistent artificial intelligence misconceptions and separate fact from fiction.

Myth 1: AI Is Inherently Objective and Unbiased

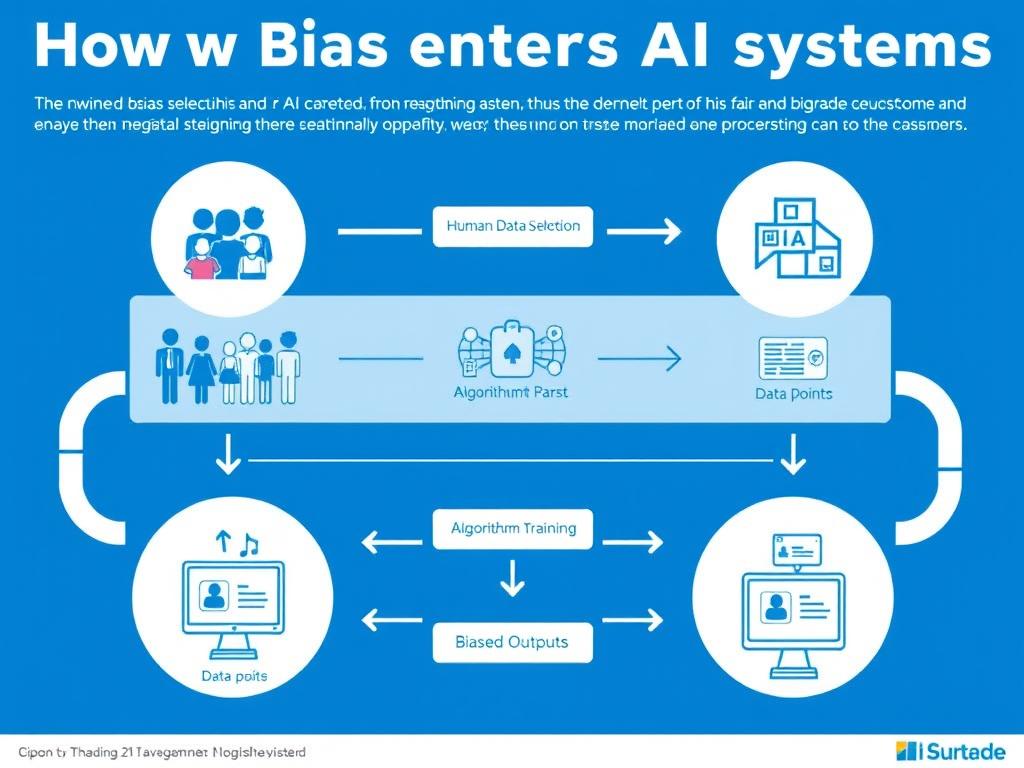

One of the most dangerous misconceptions is that AI systems make decisions free from human bias. This belief stems from the assumption that because machines process data mathematically, they must be neutral.

Reality Check: AI systems are trained on data that humans collect, select, and label, and those choices carry human biases. The biases can become embedded in the trained models and lead to discriminatory outcomes.

Consider facial recognition technology, which has repeatedly demonstrated higher error rates when identifying people with darker skin tones. This isn’t because the technology is intentionally biased, but because the datasets used to train these systems often underrepresent certain demographic groups.
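
One way to make this concrete is to break a model's error rate down by demographic group instead of reporting a single overall number. The sketch below is a minimal illustration in Python. The function name `error_rate_by_group` and the evaluation records are hypothetical, invented for this example, and are not drawn from any real facial recognition system.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: fraction of records where the prediction was wrong}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, label, prediction in records:
        totals[group] += 1
        if prediction != label:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up evaluation records: (group, true_label, predicted_label).
# group_b is deliberately underrepresented, mirroring a skewed dataset.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0),
]

rates = error_rate_by_group(records)
print(rates)  # {'group_a': 0.125, 'group_b': 0.5}
```

In this made-up sample the overall error rate is 20%, which hides the fact that the underrepresented group sees errors four times as often (50% versus 12.5%).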

Myth 2: AI Will Achieve Human-Like Consciousness

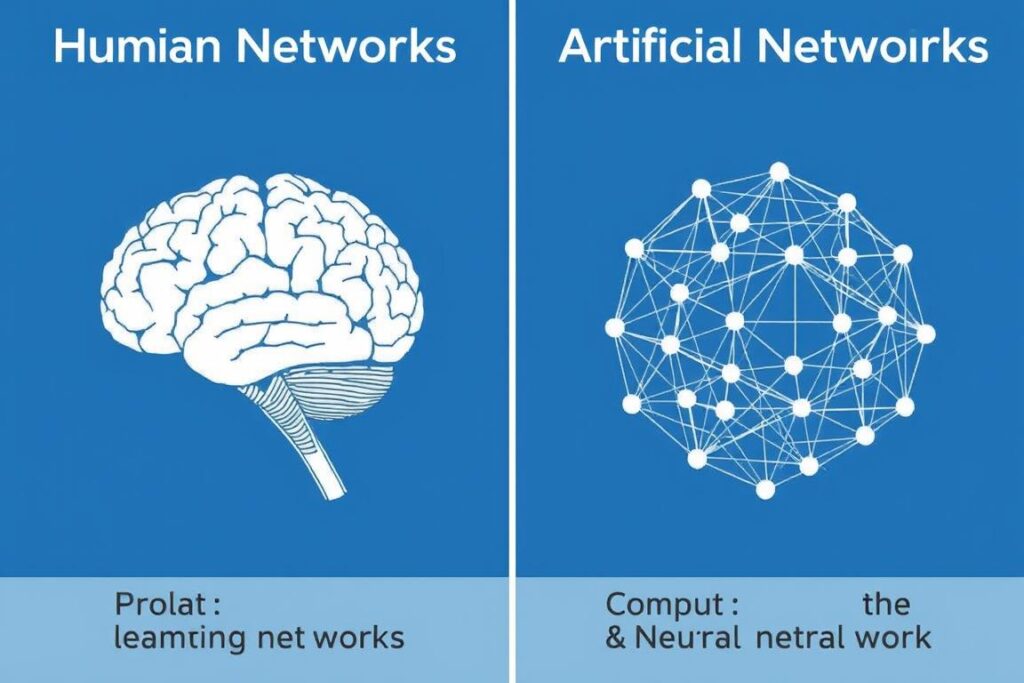

Popular culture has long portrayed AI as eventually developing self-awareness and consciousness similar to humans. This misconception leads to both unrealistic expectations and unnecessary fears.

Treating AI as if it will inevitably become conscious is like expecting a calculator to eventually write poetry—it fundamentally misunderstands what the technology is designed to do.

Current AI systems, including sophisticated ones like ChatGPT, operate through pattern recognition and statistical analysis. They can mimic human-like responses but lack understanding, intentions, or desires. The gap between even the most advanced AI and human consciousness remains vast and fundamental.

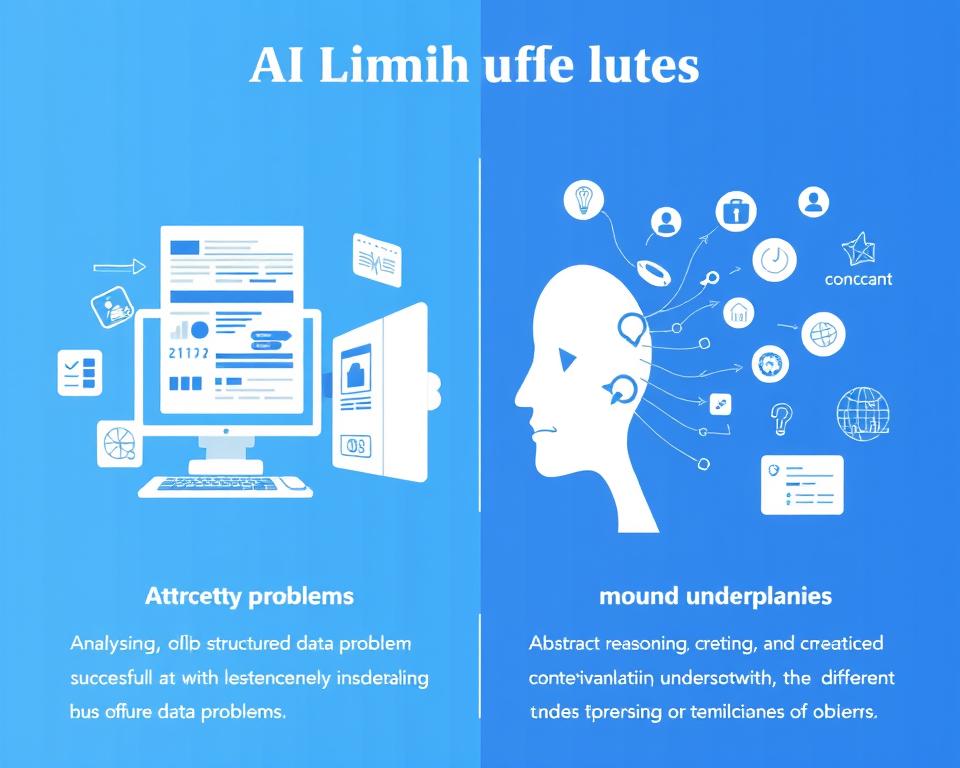

Myth 3: AI Can Solve Any Problem If Given Enough Data

There’s a persistent belief that artificial intelligence is a universal problem-solver that can tackle any challenge if provided with sufficient data. This leads to unrealistic expectations about AI’s capabilities.

AI excels at specific types of problems—particularly those involving pattern recognition in large datasets—but struggles with tasks requiring common sense reasoning, causal understanding, or adapting to novel situations.

For example, while AI can diagnose certain medical conditions from images with impressive accuracy, it cannot replace the holistic judgment of experienced physicians who integrate patient history, symptoms, and contextual factors into their assessments. AI tools are powerful but specialized instruments, not universal solutions.

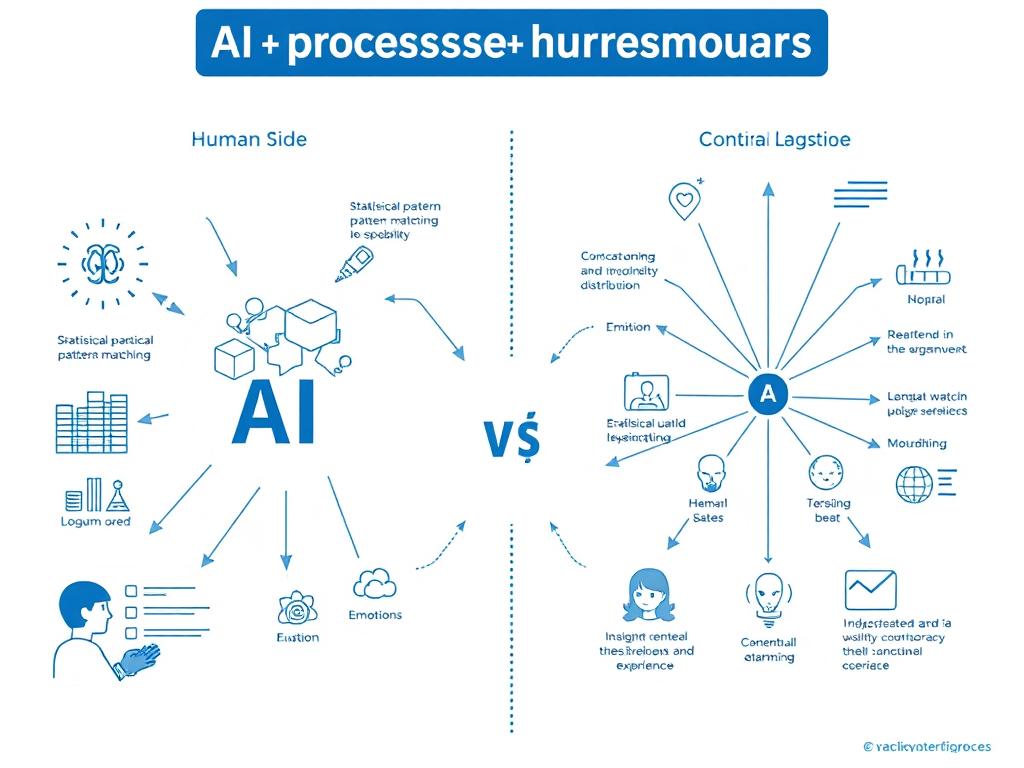

Myth 4: AI Will Replace All Human Jobs

The fear that AI will make human workers obsolete across the board is one of the most common misconceptions. While automation will certainly change the employment landscape, the relationship between AI and human work is more complex than simple replacement.

Throughout history, technological revolutions have eliminated certain jobs while creating entirely new categories of employment. AI is likely to follow this pattern, automating routine tasks while creating demand for new skills related to AI development, implementation, and oversight.

Tasks AI Excels At:

- Processing large volumes of data

- Recognizing patterns in structured information

- Performing repetitive, well-defined tasks

- Making predictions based on historical data

Tasks Humans Do Better:

- Creative problem-solving in novel situations

- Emotional intelligence and empathy

- Ethical decision-making with complex tradeoffs

- Adapting to changing circumstances and contexts

Myth 5: AI Systems Understand Meaning Like Humans Do

When interacting with sophisticated language models like ChatGPT, it’s easy to believe these systems understand the meaning behind words the way humans do. This misconception can lead to overreliance on AI for tasks requiring genuine comprehension.

AI language models process text as statistical patterns rather than meaningful concepts. They can generate coherent responses because they’ve been trained on vast amounts of human-written text, but they lack the experiential understanding that grounds human language in reality.
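
A toy example can illustrate what "statistical patterns" means here. The sketch below builds a bigram model, which only counts which word follows which, and then samples from those counts. It is a deliberately simplified stand-in: production language models such as ChatGPT use neural networks over far more context, and the function names and tiny corpus are assumptions made for this illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, length, seed=0):
    """Sample up to `length` words, picking each next word by observed frequency."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = counts.get(word)
        if not followers:
            break  # this word was never followed by anything in the corpus
        choices = list(followers.keys())
        weights = list(followers.values())
        word = rng.choices(choices, weights=weights, k=1)[0]
        output.append(word)
    return " ".join(output)


# Hypothetical miniature "training corpus"
corpus = "the cat sat on the mat the dog sat on the rug"
model = train_bigrams(corpus)
sample = generate(model, "the", 6)
print(sample)
```

The output can look locally fluent, yet the program holds nothing but word-pair counts. It has no notion of what a cat or a mat is.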

Why These Misconceptions Matter

Misunderstandings about artificial intelligence aren’t merely academic concerns—they have real-world consequences for individuals, businesses, and society as a whole.

Ethical Risks and Unintended Consequences

When we misunderstand AI’s capabilities and limitations, we risk deploying these systems in ways that can cause harm. For example, believing that AI hiring systems are objective may lead companies to overlook the ways these tools can perpetuate existing biases in employment.

In 2018, Reuters reported that Amazon had scrapped an AI recruiting tool that showed bias against women. The system had been trained on resumes submitted over a 10-year period, most of which came from men, reflecting male dominance in the tech industry.

Similarly, overconfidence in AI’s decision-making abilities can lead to inadequate human oversight in critical areas like healthcare, criminal justice, and financial services, where algorithmic errors can have serious consequences for individuals.

Policy Gaps and Regulatory Challenges

Misconceptions about AI also complicate efforts to develop appropriate regulations and governance frameworks. Policymakers who overestimate AI capabilities might implement unnecessarily restrictive regulations that hamper innovation, while those who underestimate AI’s potential impacts might fail to address legitimate risks.

Effective AI governance requires a nuanced understanding of both the technology’s capabilities and its limitations. Without this understanding, we risk creating regulatory frameworks that either fail to protect against real harms or unnecessarily constrain beneficial applications.

Public Anxiety vs. Realistic Concerns

Media portrayals of artificial intelligence often emphasize either utopian or dystopian extremes, contributing to public confusion about the technology’s actual capabilities and risks. This can lead to misplaced anxiety about science-fiction scenarios while overlooking more immediate concerns.

We should be concerned about AI’s impact not because it will become sentient and take over, but because we’re already giving algorithms control over important decisions without fully understanding how they work.

A more realistic understanding of AI would help direct public attention toward pressing issues like algorithmic bias, privacy implications, and the economic impacts of automation—areas where informed public engagement is essential for developing responsible approaches to AI development and deployment.

The Need for Nuanced AI Education

Addressing artificial intelligence misconceptions requires a commitment to more nuanced education about what AI is, how it works, and its appropriate applications. This education needs to reach beyond technical specialists to include business leaders, policymakers, and the general public.

Moving Beyond Simplistic Narratives

Understanding AI requires moving beyond both hype and fear to develop a more realistic assessment of the technology’s capabilities and limitations. This means acknowledging that AI systems are powerful tools that can enhance human capabilities in specific domains while recognizing they lack the general intelligence and judgment that characterize human cognition.

Think of AI as a highly specialized instrument rather than a general-purpose brain. A telescope dramatically enhances our ability to see distant objects but is useless for hearing sounds. Similarly, AI systems excel at specific tasks within their design parameters but lack the versatility of human intelligence.

Developing AI Literacy

Just as digital literacy became essential in the information age, AI literacy is becoming crucial for effective participation in a world increasingly shaped by algorithmic systems. This doesn’t mean everyone needs to understand the technical details of neural networks, but rather that people should develop a basic understanding of:

- How AI systems learn from data and the implications of this for bias and accuracy

- The difference between narrow AI (designed for specific tasks) and general intelligence

- The importance of human oversight and intervention in AI-supported decision-making

- How to evaluate claims about AI capabilities critically

Educational institutions, industry leaders, and media organizations all have roles to play in fostering this broader AI literacy.

Conclusion: Beyond the Myths

Understanding artificial intelligence accurately is not just an academic exercise—it’s essential for making informed decisions about how we develop, deploy, and govern these powerful technologies. By moving beyond common misconceptions, we can have more productive conversations about both the opportunities and challenges AI presents.

The danger isn’t AI itself; it’s using it as a substitute for critical thinking. As AI becomes increasingly integrated into our lives and work, the ability to distinguish between realistic capabilities and overblown claims will only grow more important. By developing a more nuanced understanding of what AI can and cannot do, we can harness its potential while mitigating its risks.

The future of AI will be shaped not by the technology alone, but by how we choose to use it. And those choices should be informed by clear-eyed understanding rather than misconceptions.
